I’ll try to answer:

- I suspect your NDVI layer in the Dashboard doesn’t have the dataMask output, which is a mandatory output of the evalscript for it to be usable with the Statistical API. Because of this requirement, the result of the evaluatePixel function should be a dictionary. All the other outputs could go into the default output, but at least personally, I like named outputs.
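To make the requirement above concrete, here is a small local sanity check written in plain JavaScript, the language evalscripts use. It is a sketch and not part of Sentinel Hub. The helper `checkStatEvalscript` and the example functions are hypothetical. The check only tests the two rules stated above: `setup()` declares an output with id `dataMask`, and `evaluatePixel` returns a dictionary keyed by output id. It also assumes that an output without an `id` is named `default`.

```javascript
// Hypothetical local check (not a Sentinel Hub API): is the evalscript shaped
// the way the Statistical API expects?
function checkStatEvalscript(setupFn, evaluatePixelFn, sample) {
  const outputs = setupFn().output;
  // A single output object is allowed; normalise to an array.
  const outputList = Array.isArray(outputs) ? outputs : [outputs];
  // Assumption: an output without an id is called "default".
  const ids = outputList.map((o) => o.id || "default");
  const problems = [];

  if (!ids.includes("dataMask")) {
    problems.push('setup() has no output with id "dataMask"');
  }

  const result = evaluatePixelFn(sample);
  if (Array.isArray(result) || typeof result !== "object" || result === null) {
    problems.push("evaluatePixel must return a dictionary, not an array");
    return problems;
  }
  // With named outputs, every output id needs a matching key in the result.
  for (const id of ids) {
    if (!(id in result)) {
      problems.push(`evaluatePixel result is missing key "${id}"`);
    }
  }
  return problems;
}

// Example: a minimal NDVI evalscript in the named-output style.
function setupExample() {
  return {
    input: [{ bands: ["B04", "B08", "dataMask"] }],
    output: [
      { id: "index", bands: 1, sampleType: "FLOAT32" },
      { id: "dataMask", bands: 1 }
    ]
  };
}

function evaluatePixelExample(samples) {
  const ndvi = (samples.B08 - samples.B04) / (samples.B08 + samples.B04);
  return { index: [ndvi], dataMask: [samples.dataMask] };
}

const sample = { B04: 0.1, B08: 0.5, dataMask: 1 };
console.log(checkStatEvalscript(setupExample, evaluatePixelExample, sample)); // []
```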

A rather simple, but “explicit”, evalscript is this one:

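    //VERSION=3
    function setup() {
      return {
        input: [{
          bands: ["B04", "B08", "dataMask"],
          units: "DN"
        }],
        output: [
          {
            id: "bands",          // the raw bands as a named output
            bands: ["B04", "B08"],
            sampleType: "UINT16"
          },
          {
            id: "index",          // the computed NDVI
            bands: ["NDVI"],
            sampleType: "FLOAT32"
          },
          {
            id: "dataMask",       // mandatory output for the Statistical API
            bands: 1
          }
        ]
      };
    }

    function evaluatePixel(samples) {
      // index(a, b) is the evalscript helper for (a - b) / (a + b)
      let NDVI = index(samples.B08, samples.B04);
      // With named outputs, return a dictionary keyed by output id
      return {
        bands: [samples.B04, samples.B08],
        index: [NDVI],
        dataMask: [samples.dataMask]
      };
    }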

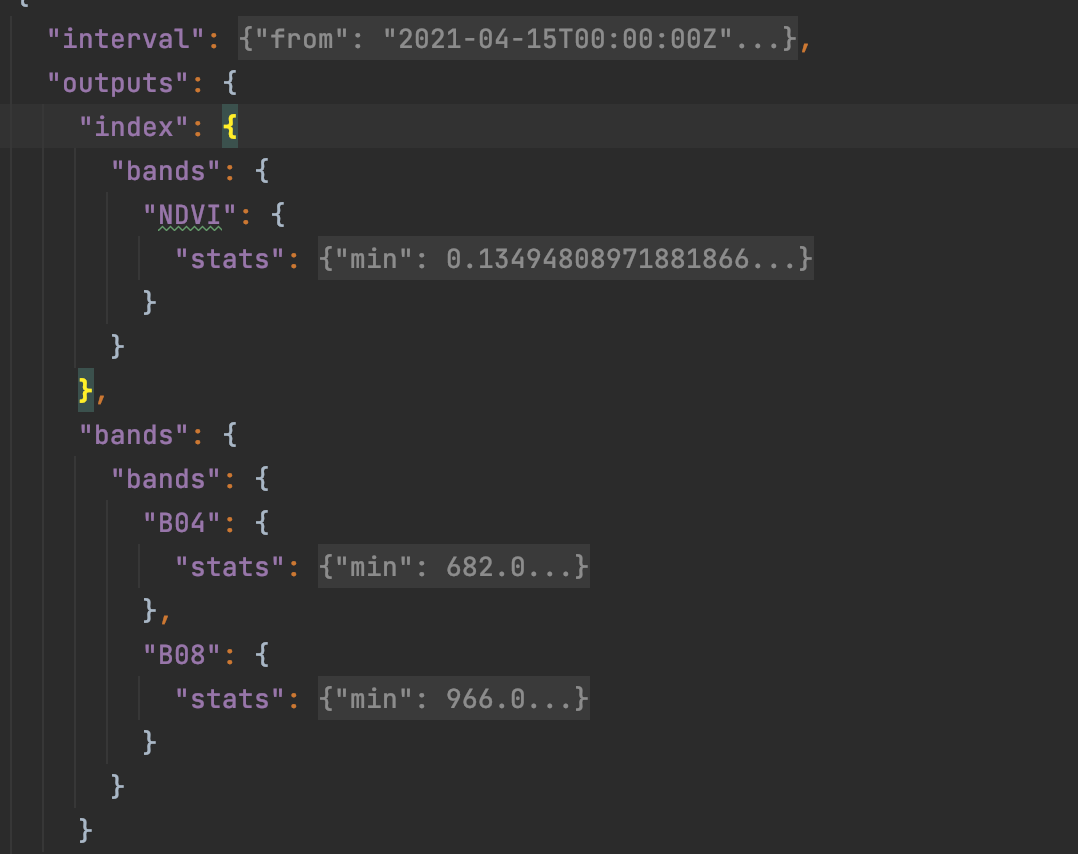

which results in the output of the Statistical API with named outputs and named “bands” of the outputs as well, e.g.:

- According to the API docs, the input.bounds parameters of a Statistical API request are the same as for the Process API. Please see the docs for details. For resolution, the docs also state: “Spatial resolution used to calculate the width of the sample matrix from the input.bounds. Its units are defined by the CRS given in input.bounds.properties.crs parameter.”
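Because the resolution is expressed in the units of the bbox CRS, the same number means metres in a UTM zone but degrees in EPSG:4326. The sketch below shows how resx/resy translate into the size of the sample matrix. The function name is hypothetical, the bbox values are illustrative, and rounding to the nearest pixel is an assumption; the service may round differently.

```javascript
// Sketch: sample-matrix size from a bbox [minX, minY, maxX, maxY] and a
// resolution given in the units of the bbox CRS.
function sampleMatrixSize(bbox, resx, resy) {
  const [minX, minY, maxX, maxY] = bbox;
  return {
    width: Math.round((maxX - minX) / resx),  // assumption: round to nearest
    height: Math.round((maxY - minY) / resy)
  };
}

// A 2 km x 1 km box in a UTM zone (coordinates in metres), sampled at 10 m.
const utmBox = [500000, 4600000, 502000, 4601000];
console.log(sampleMatrixSize(utmBox, 10, 10)); // { width: 200, height: 100 }

// The same idea in EPSG:4326: the resolution must be given in degrees.
const wgsBox = [14.0, 46.0, 14.02, 46.01];
console.log(sampleMatrixSize(wgsBox, 0.0001, 0.0001)); // { width: 200, height: 100 }
```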

2c) I’d say it depends on your case. If you want rough statistics for large areas, then absolutely, reduce the resolution substantially: you will get “good enough” stats much faster. You can always run an experiment to find the sweet spot.

I’ll have to come back to you with answers for 2a and 2b; I need to check them myself first.

2a) If neither res_x/res_y nor width/height parameters are specified, defaults will be used: width = height = 256.

2b) Such a request will result in an error. At the moment, the error message is misleading (it says “The bounding box area too large.”).

Thanks for the replies.

Your answer to 2a explains something:

I just found I’ve burned through A LOT more PUs than I would have expected. I was making requests in EPSG:4326 without setting height, width, resx or resy, so it was obviously using the default. Now that I reproject to a UTM zone and set resx and resy to 10, each request uses about 1/6 of the PUs!

So, if you care about the PU cost, don’t leave resx/resy unset!
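Here is a sketch of a Statistical API request body that sets the resolution explicitly, plus a check that flags requests which would fall back to the 256 x 256 default described in 2a. The field layout follows the thread (input.bounds with a CRS, aggregation with resx/resy, an "S2L2A" data source with mosaickingOrder). The bbox, EPSG code, dates and aggregation interval are placeholders. The function names are hypothetical, so check the API docs before relying on the exact structure.

```javascript
// Sketch of a request body with explicit resolution. All concrete values
// (bbox, EPSG code, time range, interval) are placeholders.
function buildStatsRequest(evalscript, bbox, epsgCode, resolution) {
  return {
    input: {
      bounds: {
        bbox: bbox,
        properties: { crs: `http://www.opengis.net/def/crs/EPSG/0/${epsgCode}` }
      },
      data: [{ type: "S2L2A", dataFilter: { mosaickingOrder: "leastCC" } }]
    },
    aggregation: {
      timeRange: { from: "2021-06-01T00:00:00Z", to: "2021-07-01T00:00:00Z" },
      aggregationInterval: { of: "P10D" },
      evalscript: evalscript,
      resx: resolution, // units follow the CRS: metres for a UTM zone
      resy: resolution
    }
  };
}

// True if neither resx/resy nor width/height is set, i.e. the request would
// use the 256 x 256 default. A half-set pair is treated as unset (assumption).
function usesDefaultSize(aggregation) {
  const hasRes = aggregation.resx != null && aggregation.resy != null;
  const hasSize = aggregation.width != null && aggregation.height != null;
  return !hasRes && !hasSize;
}

const req = buildStatsRequest("//VERSION=3 ...", [500000, 4600000, 502000, 4601000], 32633, 10);
console.log(usesDefaultSize(req.aggregation)); // false
console.log(usesDefaultSize({ evalscript: "//VERSION=3 ..." })); // true
```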

Another thing to take into account is that statistics of 40 pixels at 10-meter resolution will be similar to statistics of 10 pixels at 20-meter resolution (over the same area), yet you will use four times fewer PUs. Therefore, if you are performing your analysis over a large area, make sure to downscale, unless you are really certain you want to do it at full resolution.
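The factor of four comes from the pixel count scaling with the inverse square of the resolution: halving the resolution along both axes quarters the number of pixels. A quick check in JavaScript, using an illustrative 1 km x 1 km square (the function name is ours):

```javascript
// Number of pixels needed to cover a square area at a given resolution.
function pixelsForSquare(sideMetres, resMetres) {
  const perSide = Math.ceil(sideMetres / resMetres);
  return perSide * perSide;
}

const side = 1000; // 1 km x 1 km, illustrative
const at10 = pixelsForSquare(side, 10); // 100 x 100 = 10000
const at20 = pixelsForSquare(side, 20); // 50 x 50 = 2500
console.log(at10 / at20); // 4
```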

Thanks for the tip, Grega, but maybe you can shed some light on something:

None of this shows up in the usage history yet, so I can only estimate the PU cost by looking at my monthly PU remaining quota.

I have a test request. At 10 meters, the PU quota goes down by 10, then, a few seconds later, it goes down by about 1 more PU.

At 20 meters, it goes down by 10 PUs, then goes up again by about 8 PUs (so the overall cost is about 2-3 PUs).

The same happens at 30 meters: it goes down by 10 PUs, then seems to “refund” some.

Because of this, I initially thought that all resolutions had the same PU cost…

What’s going on?

Also, this service is really great (especially now that I’m using ca. 1/20 of the PUs I was originally)!

As a general note: the PU calculation of this service should be exactly the same as for the Process API (i.e. as if you had made individual Process API requests to get the data and then calculated the statistics yourself).

Your account (I am assuming you are using user id 1cb70d3a-MASKED) is set to 30,000 processing units per month. This means that a new PU is added to your account every 89 seconds, which makes it difficult to work out on your side what exactly is happening. (As a side note: we will soon change this model and refill the account once per month, on the 1st of the month.)
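The 89-second figure follows from spreading the monthly quota evenly over the month. The sketch below assumes an even, one-PU-at-a-time refill, which is our reading of the description above. With that assumption, 30,000 PUs over a 31-day month gives one PU every 89.28 s, and over a 30-day month one every 86.4 s.

```javascript
// Seconds between refills if a monthly quota is added back one PU at a
// time, spread evenly over the month (assumption).
function secondsPerPU(monthlyPU, daysInMonth) {
  return (daysInMonth * 24 * 60 * 60) / monthlyPU;
}

console.log(secondsPerPU(30000, 31).toFixed(2)); // 89.28
console.log(secondsPerPU(30000, 30).toFixed(2)); // 86.40
```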

You are making quite a few requests, so it is difficult to analyse them individually. However, this is what I notice:

- all requests are “small ones”, below the minimum order threshold, so they are counted as 0.01 PU in terms of “size”.

- most of the requests made in the last hour cost 2.64 PU each

- the rest are all over the place, from 2 to 15 PU (at least those I’ve looked into)

The first bullet above is relevant, as it is not in line with your finding that setting the resolution makes any difference (there is a chance that the size is recorded wrongly in our logs but still applied correctly in the calculation).

I am guessing (as I do not have insight into the details of your requests) that the variation is mostly related to the number of observations and the number of bands.
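Here is a rough sketch of why the number of observations and the number of bands dominate once the size factor is pinned at its 0.01 minimum. It assumes the Process API multiplication factors as we understand them from the Sentinel Hub PU documentation: size factor = width × height / 512², never below 0.01; bands factor = input bands / 3; and the result multiplied by the number of observations. Other factors are ignored, so treat it as an estimate and check the docs for the exact rules.

```javascript
// Rough PU estimate under ASSUMED Process API factors:
//   size factor  = width * height / (512 * 512), minimum 0.01
//   bands factor = number of input bands / 3
//   multiplied by the number of observations (data samples) processed.
// Other factors (output format, etc.) are ignored in this sketch.
function estimatePU(width, height, inputBands, observations) {
  const sizeFactor = Math.max(0.01, (width * height) / (512 * 512));
  const bandsFactor = inputBands / 3;
  return sizeFactor * bandsFactor * observations;
}

// A small request: the size factor is clamped to 0.01.
console.log(estimatePU(40, 40, 3, 1)); // 0.01
// A full 512 x 512 tile with 3 bands over 10 observations.
console.log(estimatePU(512, 512, 3, 10)); // 10
```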

If you find any inconsistency, it would be great if you could report more details so that we can look into it. There is a chance that we have a bug in the StatAPI PU calculation, as it’s a new service.

The easiest way to provide us with debug info is to create a new OAuth client ID, use it to make just a few requests (e.g. 1 minute apart), and let us know what you have done (we do not keep all the details of your requests in our logs). Then we can compare this with the individual logs.

Thanks for the answers.

- Good to know it should be same PU calc as the Process API.

- Why the change to granting all PUs on the 1st of the month? I would vote against that if it were a democracy…

- If you were to look back at the requests before ca. 5pm yesterday, you should see they were all much larger. 2.64 PU is expected after I explicitly set the resolution parameters.

- I’m confused: if my requests are smaller than the min-order threshold, should the resolution make a difference or not?

- I will do some tests with a new OAuth client ID and let you know by email.

Thanks for the named outputs example.

If the dataMask is required as a separate named output, is it possible to use data fusion with the Statistical API? Wouldn’t one then need a separate dataMask output for each of the data sources?

Is this possible?

Before even getting to how to handle dataMasks, I can’t get it to work with named data sources at all:

e.g. having this in the request:

    {
      "type": "S2L2A",
      "id": "S2",
      "dataFilter": {
        "mosaickingOrder": "leastCC"
      },

and this in the evalscript:

    input: [{
      datasource: "S2", …

I’ll email you a sample request.

Thanks

- The full refill on the 1st of the month is coming because we realised that the existing system is too confusing for many users; we field questions related to this on a weekly basis. In practice, it is also better for users, as it guarantees that someone can continuously consume all their monthly credits, if they wish to.

- They were indeed larger, but the “size factor” was always 0.01. It might be, though, that this is logged wrongly.

- As long as requests are smaller than the min-order threshold (i.e. 51×51 px), they will be counted the same. If, however, you increase the resolution to e.g. 1 m, the request’s size will increase. But, at least according to the logs, your requests never got over 0.01.
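The threshold translates into a ground footprint that depends on the resolution. This would explain why 10 m and 20 m requests can behave differently over the same area. The sketch assumes the minimum size factor of 0.01 is relative to a 512 x 512 px reference, i.e. about 2621 px or roughly 51.2 x 51.2 px. It computes the largest square area, in metres per side, that still stays at the minimum for a given resolution. The constant and function names are ours.

```javascript
// Assumed minimum: 0.01 of a 512 x 512 px reference = 2621.44 px,
// i.e. a square of about 51.2 x 51.2 px.
const MIN_PIXELS = 0.01 * 512 * 512;

// Largest square side (in metres) that stays at the minimum size factor.
function maxSideAtMinimum(resMetres) {
  return Math.sqrt(MIN_PIXELS) * resMetres;
}

for (const res of [1, 10, 20]) {
  console.log(`${res} m: up to ~${maxSideAtMinimum(res).toFixed(0)} m per side`);
}
// 1 m: up to ~51 m per side
// 10 m: up to ~512 m per side
// 20 m: up to ~1024 m per side
```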

- That also means that if one accidentally burns through their credits before the month end (as just happened to me), they are completely blocked (rather than just significantly slowed down) unless they get out the credit card…

3 & 4: that’s odd, as I was seeing a difference between using 10 m and 20 m. I guess all the requests you saw were at 20 m and thus below the 0.01 threshold, but my occasional earlier tests at 10 m were larger than that…

Does the Statistical API support e.g. Landsat 8, MODIS or Sentinel-3 yet? I’m just experimenting and didn’t get any of them to work. With the original endpoint, I just get “bad request”. If I switch the endpoint (https://docs.sentinel-hub.com/api/latest/data/), I get “503 Service Unavailable”.

I’ll go ahead and answer my own question:

Hi,

I’m trying to use the Statistical API to analyse Sentinel-5P data (CH4). Can you let me know if this dataset is available via this API, and give me some advice on how to approach this?

Many Thanks

Steve

Therefore: no Landsat, MODIS or Sentinel-3 for now (except via FIS).

- There are other ways to earn PUs, e.g. by earning “love” for contributing to the community (check your Dashboard).

- 3. and 4.: the PUs definitely depend on resolution, as more pixels are processed, but only as long as the “area” (in pixels) is larger than 51×51 px.
