Hello,

I have a dataframe with polygons (WGS84) and a date range (in two columns: start and end).

The table is similar to this:

| id | start | end | polygon |
|----|-------|-----|---------|
| 0 | 2019-10-19 | 2020-02-14 | POLYGON ((-56.76952 -13.18003, -56.77289 -13.1... |
| 1 | 2019-10-10 | 2020-02-25 | POLYGON ((-54.23731 -13.23412, -54.21453 -13.2... |
| ... | ... | ... | ... |

I want to get, for each polygon, the mean value of the VV and VH bands from Sentinel-1. The mean should be computed over the pixels inside the polygon, not over the whole bounding box.

As the date range differs from row to row, I tried to run the statistics per row rather than for the whole GeoDataFrame, as described in the second option in the documentation (using the gdf, which takes the polygon shape into account):

evalscript = """
//VERSION=3
function setup() {
  return {
    input: ["VV", "VH", "dataMask"],
    output: [
      { id: "bands", bands: 2, sampleType: "FLOAT32" },
      { id: "dataMask", bands: 1 }
    ]
  };
}

function evaluatePixel(samples) {
  return {
    bands: [
      10 * Math.log(samples.VV + 0.0001) / Math.LN10,
      10 * Math.log(samples.VH + 0.0001) / Math.LN10
    ],
    dataMask: [samples.dataMask]
  };
}
"""

for index, row in gdf.iterrows():
    tmp = gpd.GeoDataFrame(gdf.loc[index]).T
    tmp = tmp.set_geometry("polygon")
    bbox_size, bbox, bbox_coords_wgs84 = get_bbox_from_shape(tmp, 10)
    tmp.set_crs(epsg=4326, inplace=True)

    start_date = tmp['start'].values[0]
    end_date = tmp['end'].values[0]
    time_interval = (start_date, end_date)

    aggregation = SentinelHubStatistical.aggregation(
        evalscript=evalscript, time_interval=time_interval, aggregation_interval="P1D", resolution=(10, 10)
    )

    input_data = [SentinelHubStatistical.input_data(DataCollection.SENTINEL1_IW)]

    for geo_shape in tmp.geometry.values:
        request = SentinelHubStatistical(
            aggregation=aggregation,
            input_data=[input_data],
            geometry=Geometry(geo_shape, crs=CRS(tmp.crs)),
            config=config,
        )

The problem is that I get the following error:

TypeError                                 Traceback (most recent call last)
/tmp/ipykernel_17356/1793327201.py in <module>
     39
     40     for geo_shape in tmp.geometry.values:
---> 41         request = SentinelHubStatistical(
     42             aggregation=aggregation,
     43             input_data=[input_data],

/opt/conda/envs/rs/lib/python3.8/site-packages/sentinelhub/sentinelhub_statistical.py in __init__(self, aggregation, input_data, bbox, geometry, calculations, **kwargs)
     40         self.mime_type = MimeType.JSON
     41
---> 42         self.payload = self.body(
     43             request_bounds=self.bounds(bbox=bbox, geometry=geometry),
     44             request_data=input_data,

/opt/conda/envs/rs/lib/python3.8/site-packages/sentinelhub/sentinelhub_statistical.py in body(request_bounds, request_data, aggregation, calculations, other_args)
     70         for input_data_payload in request_data:
     71             if 'dataFilter' not in input_data_payload:
---> 72                 input_data_payload['dataFilter'] = {}
     73
     74         if calculations is None:

TypeError: list indices must be integers or slices, not str


When I run the statistics as shown at the beginning of the documentation, with only the bounding box, it works, but it seems that I get results per bounding box and not per polygon.

My questions are:

-

Why do I get this error? I’m not sure I understand what is the list of indices mentioned in the error.

-

Is there any way to run this as shown in the first option of the documentation, with the one bbox, but instead of bbox, use one polygon? (similar to masking the sat image to get only pixels inside the polygon)

-

Is there any way I can apply the processing of sentinel 1 inside the statistics? for example. select geometric correction and speckle filter (lee 7X7)

-

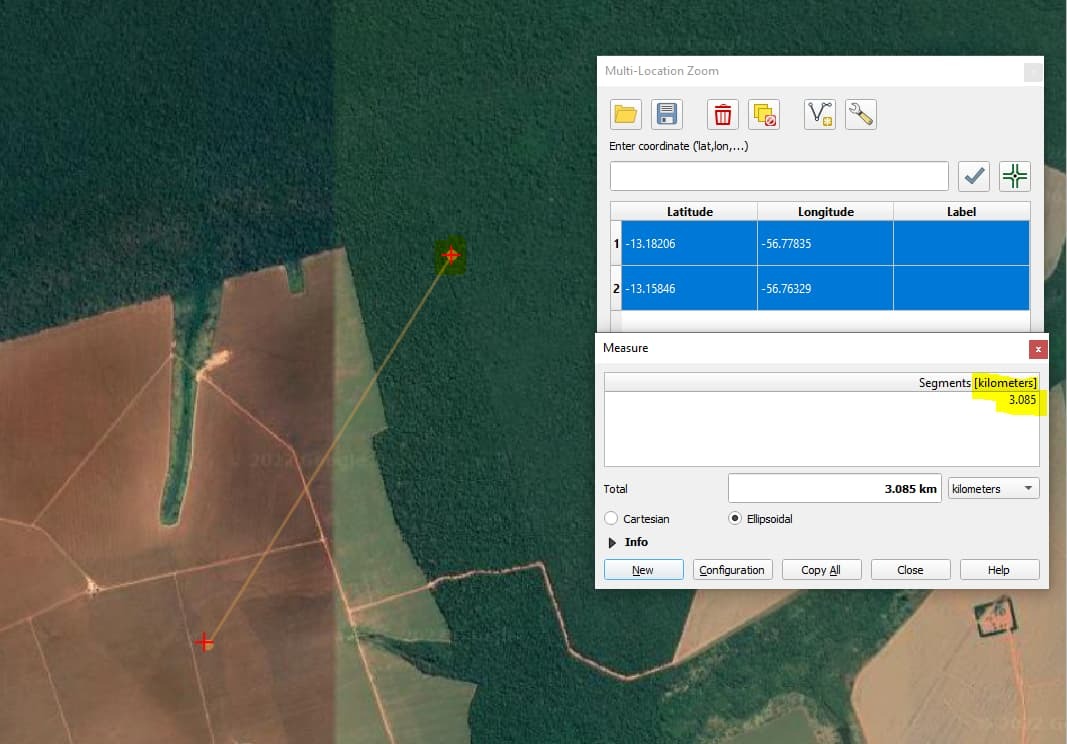

do I need to change to another projection in order to get correct results? I can check for this case the espg in utm, but I want this part to be automatic. I saw some function in sentinel hub that seem to be able to convert from wgs to utm,but seems like is specific coordinates but not polygon, and I don’t want to search every time the specific utm. I saw something similar to this in the documentation of large area (osm splitter?)
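A note on question 1, in case it helps with reading the traceback: the message can be reproduced without Sentinel Hub at all. The sketch below is only an illustration under assumptions. `make_input_payload` and `fill_data_filter` are hypothetical stand-ins (the first for whatever dict-like object `SentinelHubStatistical.input_data(...)` returns, the second for the loop shown at lines 70-72 of the traceback), and the `"sentinel-1-grd"` string is a placeholder value. It shows that when a list is wrapped in a second list, the loop receives the inner list instead of a dict and then tries to index it with the string `'dataFilter'`.

```python
def make_input_payload(collection_name):
    # Hypothetical stand-in for one input-data payload (assumed to be dict-like).
    return {"type": collection_name}


def fill_data_filter(request_data):
    # Mirrors the loop visible in the traceback: every element of
    # request_data is expected to be a dict.
    for input_data_payload in request_data:
        if "dataFilter" not in input_data_payload:
            input_data_payload["dataFilter"] = {}
    return request_data


# As in the post, input_data is already a list holding one payload.
input_data = [make_input_payload("sentinel-1-grd")]

# Passing the list directly: each element is a dict, so this works.
ok = fill_data_filter(input_data)

# Passing [input_data]: the only element is itself a list, so
# input_data_payload["dataFilter"] indexes a list with a string.
try:
    fill_data_filter([input_data])
    error_message = None
except TypeError as exc:
    error_message = str(exc)

print(error_message)
```

If this reading is right, the "list indices" in the message refer to the inner list created by `input_data=[input_data]`, not to anything in the GeoDataFrame.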

Edit for question 4: I found that I can use the geopandas function .estimate_utm_crs(), in case it helps anyone who ends up here 🙂
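For anyone curious what an automatic UTM choice amounts to, here is a rough pure-Python sketch. It is not how geopandas implements `.estimate_utm_crs()`; `utm_epsg_from_lonlat` is a hypothetical helper written for this post. It assumes the standard 6-degree UTM zone layout and the WGS84 EPSG numbering (326xx for the northern hemisphere, 327xx for the southern), and it ignores the special zones around Norway and Svalbard.

```python
import math


def utm_epsg_from_lonlat(lon, lat):
    """Return the EPSG code of the WGS84 / UTM zone containing (lon, lat).

    Simplified: standard 6-degree zones only, no Norway/Svalbard exceptions.
    """
    # Zones are numbered 1..60 eastwards, starting at longitude -180.
    zone = int(math.floor((lon + 180.0) / 6.0)) % 60 + 1
    # 32601..32660 are the northern zones, 32701..32760 the southern ones.
    base = 32600 if lat >= 0 else 32700
    return base + zone


# First vertex of the polygon in row 0 of the table above.
first_vertex = (-56.76952, -13.18003)
epsg = utm_epsg_from_lonlat(*first_vertex)
print(epsg)  # 32721, i.e. WGS 84 / UTM zone 21S
```

In practice a representative point of each polygon (for example its centroid) could be passed in, so that the zone is picked per row without looking it up by hand.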