Background

- I want to process S1 GRD data for about 10 locations, each covering a large area (~700 sq. km). That is roughly 7000 sq. km worth of data twice a week.

- I would prefer to use Python and, if possible, process everything through the SH platform.

- I have very limited experience with SAR data. I have mainly worked with optical data in the past.

Data and Processing Specifications:

- I am using the S1 IW GRD HiRes product (10 m/px) with orthorectification.

- Once the orthorectified data is available, I am applying some form of speckle filtering on these images and then some thresholding technique for object recognition.

- I would like to store these pre-processed images somewhere in the cloud so that I can access them later as training data.
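
To make the filtering and thresholding step concrete, here is a minimal sketch of that kind of pipeline. It is illustrative only: it assumes a plain mean (boxcar) filter as the speckle filter, linear-power backscatter as input, and a fixed threshold of -10 dB, none of which is fixed in the description above. The function names (`boxcar_filter`, `to_db`, `detect_bright_targets`) are hypothetical.

```python
import numpy as np


def boxcar_filter(img, size=3):
    """Mean filter over a size x size window; edges are padded by reflection."""
    pad = size // 2
    padded = np.pad(img, pad, mode="reflect")
    out = np.zeros(img.shape, dtype=float)
    # Sum the shifted copies of the image that make up the moving window.
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (size * size)


def to_db(linear, eps=1e-10):
    """Convert linear backscatter to decibels, guarding against log(0)."""
    return 10.0 * np.log10(np.maximum(linear, eps))


def detect_bright_targets(backscatter_linear, threshold_db=-10.0, size=3):
    """Smooth in the linear domain, then flag pixels above threshold_db.

    threshold_db is an assumed placeholder value, not a recommended one.
    """
    smoothed = boxcar_filter(backscatter_linear, size)
    return to_db(smoothed) > threshold_db


# Synthetic scene: dark background with one bright 3 x 3 target.
scene = np.full((9, 9), 0.01)
scene[3:6, 3:6] = 1.0
mask = detect_bright_targets(scene)
```

A boxcar filter blurs edges, so an adaptive filter (for example Lee) would probably be a better fit in practice; the structure of the pipeline would stay the same.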

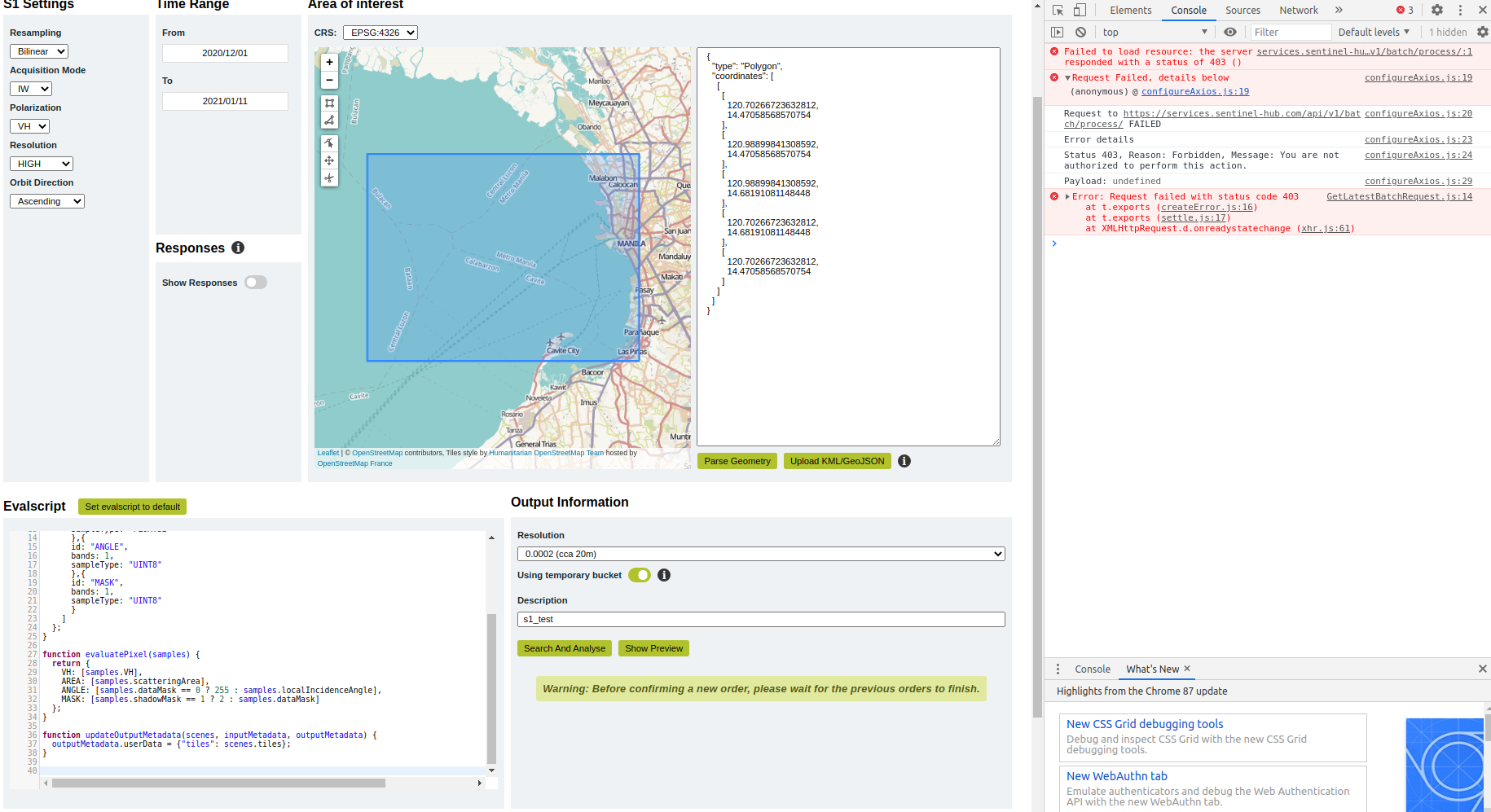

What I have tried so far:

- A simple cURL-style request from Python. I ran into issues downloading the GeoTIFF images to work with on my local machine. I assume this is a syntax error on my part, but this is probably not the best approach for what I am trying to do anyway.

- The SH Python SDK with the Process API. I ran into an issue with the area being too large.
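
One possible workaround for the size issue is to split each area into smaller tiles and request them one by one. The sketch below is only an illustration under assumptions not stated above: the bounding box is given in a projected CRS with metre units (e.g. UTM), and a single request is limited to 2500 px per side. `split_bbox` and its parameters are hypothetical names, not part of the SH SDK.

```python
import math


def split_bbox(bbox, resolution_m=10.0, max_px=2500):
    """Split (min_x, min_y, max_x, max_y), in metres, into equal tiles.

    Each tile is at most max_px pixels per side at the given resolution.
    max_px=2500 is an assumed per-request limit; adjust it as needed.
    """
    min_x, min_y, max_x, max_y = bbox
    max_size_m = resolution_m * max_px
    # Number of tiles needed along each axis (at least one).
    nx = max(1, math.ceil((max_x - min_x) / max_size_m))
    ny = max(1, math.ceil((max_y - min_y) / max_size_m))
    step_x = (max_x - min_x) / nx
    step_y = (max_y - min_y) / ny
    tiles = []
    for i in range(nx):
        for j in range(ny):
            tiles.append((
                min_x + i * step_x,
                min_y + j * step_y,
                min_x + (i + 1) * step_x,
                min_y + (j + 1) * step_y,
            ))
    return tiles


# Example: a 30 km x 20 km area at 10 m/px is 3000 x 2000 px, so it is
# split along x only.
tiles = split_bbox((500000.0, 4000000.0, 530000.0, 4020000.0))
```

Each returned tuple could then be used as the bounding box of a separate request, with the results mosaicked afterwards.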

Questions:

- If I understand correctly, I should be using the Batch Processing API for this. Is that a correct assumption?

- Is there a way to store the pre-processed images on AWS S3 or GCS directly from the SH platform once the Batch Processing API has finished?

- Am I missing anything else here that I should be considering?

Thank you in advance. - Chinmay