Hello.

I’m trying to figure out the difference between L2A products downloaded from the Planetary Computer service and those from the SentinelHub service.

What I do:

I download MSI GeoTIFFs for the same polygon and the same date from both services and get totally different products.

I understand that SH applies some processing and mosaicking “under the hood” to cover the whole area of interest without the empty parts of the original product. What concerns me most is that the value ranges of the two products differ. In terms of values, the Planetary Computer data looks much closer to the data from Copernicus Hub, which is quite raw.

Do you know whether I can convert the values from the two providers so that they have the same, or at least a similar, distribution and value range?
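My only guess so far is the offset of 1000 that, as far as I understand, is added to L2A digital numbers from processing baseline 04.00 onwards. SH may remove it by default, while Planetary Computer seems to serve the values as stored. Below is a minimal sketch of the conversion I have in mind, assuming that offset really is the cause. `harmonize_dn` and its parameters are names I made up for illustration, and I believe (but have not confirmed) that the baseline is available per item as the `s2:processing_baseline` STAC property.

```python
import numpy as np

# Assumption: from processing baseline 04.00 onwards, L2A reflectance is
# stored as DN = reflectance * 10000 + 1000 (older baselines have no offset).
OFFSET_BASELINE = 4.0
BOA_OFFSET = 1000


def harmonize_dn(dn, processing_baseline, offset=BOA_OFFSET):
    """Shift newer-baseline DN values back to the pre-offset scale.

    Hypothetical helper: apply it to reflectance bands only, not to SCL.
    `processing_baseline` is a string such as "03.01" or "04.00".
    NaN (nodata) pixels stay NaN.
    """
    dn = np.asarray(dn, dtype="float64")
    if float(processing_baseline) < OFFSET_BASELINE:
        return dn  # older baselines carry no offset
    # Clip first so dark pixels below the offset do not go negative.
    return np.clip(dn, offset, None) - offset


raw = np.array([np.nan, 900.0, 1000.0, 1450.0, 4200.0])
print(harmonize_dn(raw, "04.00"))  # offset removed
print(harmonize_dn(raw, "03.01"))  # returned unchanged
```

If this is right, applying it to the Planetary Computer bands before comparing should bring the two value ranges much closer. If the ranges still differ, the cause is something else.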

SH script is simple:

ALL = """
//VERSION=3
function setup() {
  return {
    input: [{
      bands: ["B01","B02","B03","B04","B05","B06","B07","B08","B8A","B09","B11","B12","SCL"],
      units: "DN"
    }],
    output: {
      bands: 13,
      sampleType: "INT16"
    }
  };
}

function evaluatePixel(sample) {
  // Return the bands in the same order as they are requested in setup()
  return [sample.B01,
          sample.B02,
          sample.B03,
          sample.B04,
          sample.B05,
          sample.B06,
          sample.B07,
          sample.B08,
          sample.B8A,
          sample.B09,
          sample.B11,
          sample.B12,
          sample.SCL];
}
"""

The Planetary Computer stackstac request doesn’t involve any special treatment either, apart from NaN handling:

data = (
    stackstac.stack(
        signed_items,
        epsg=self.default_epsg,  # 4326
        resolution=self.default_raster_resolution,  # 10
        assets=assets,  # MSI bands list
        chunksize=self.default_chunksize,
        bounds_latlon=bbox,
    )
    .where(lambda x: x > 0, other=np.nan)
    .assign_coords(band=assets)
)
return data

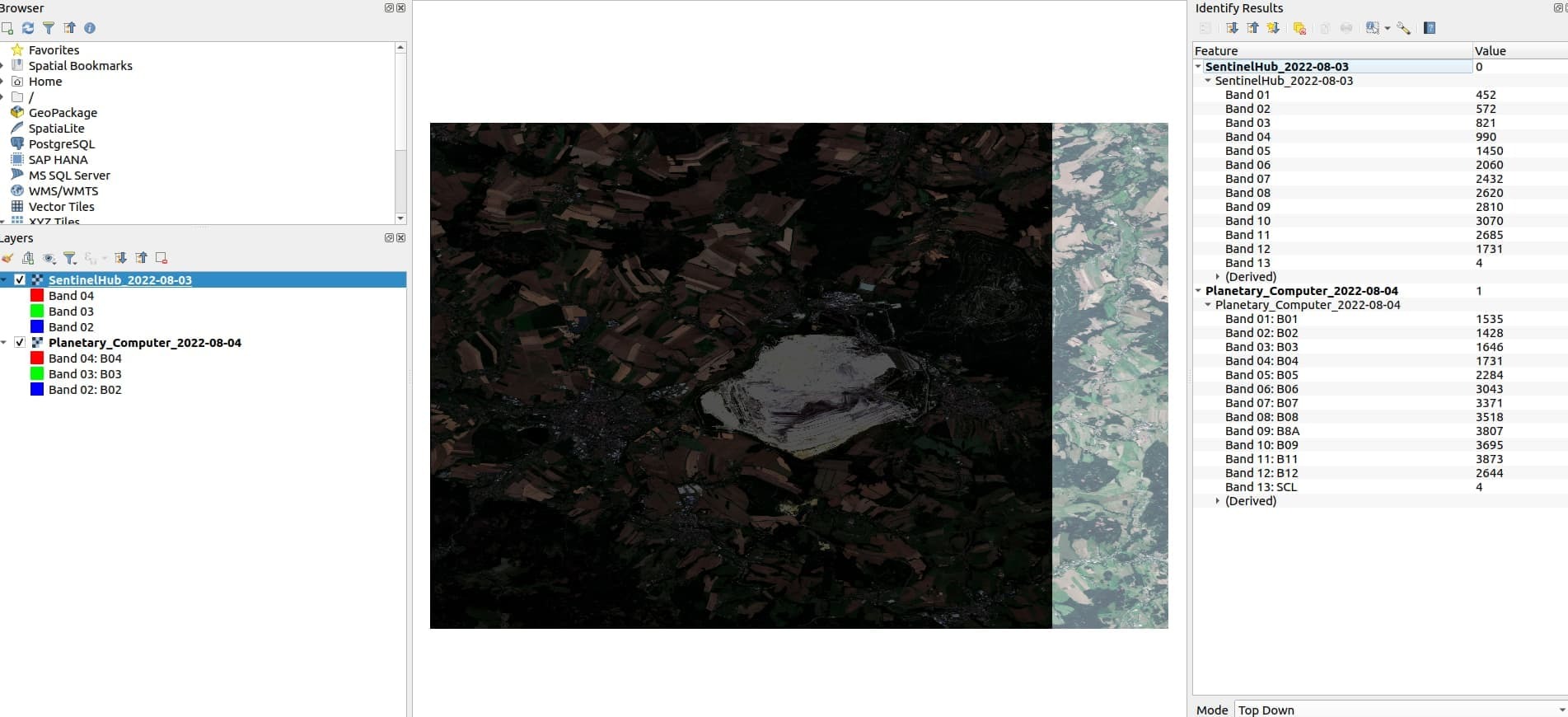

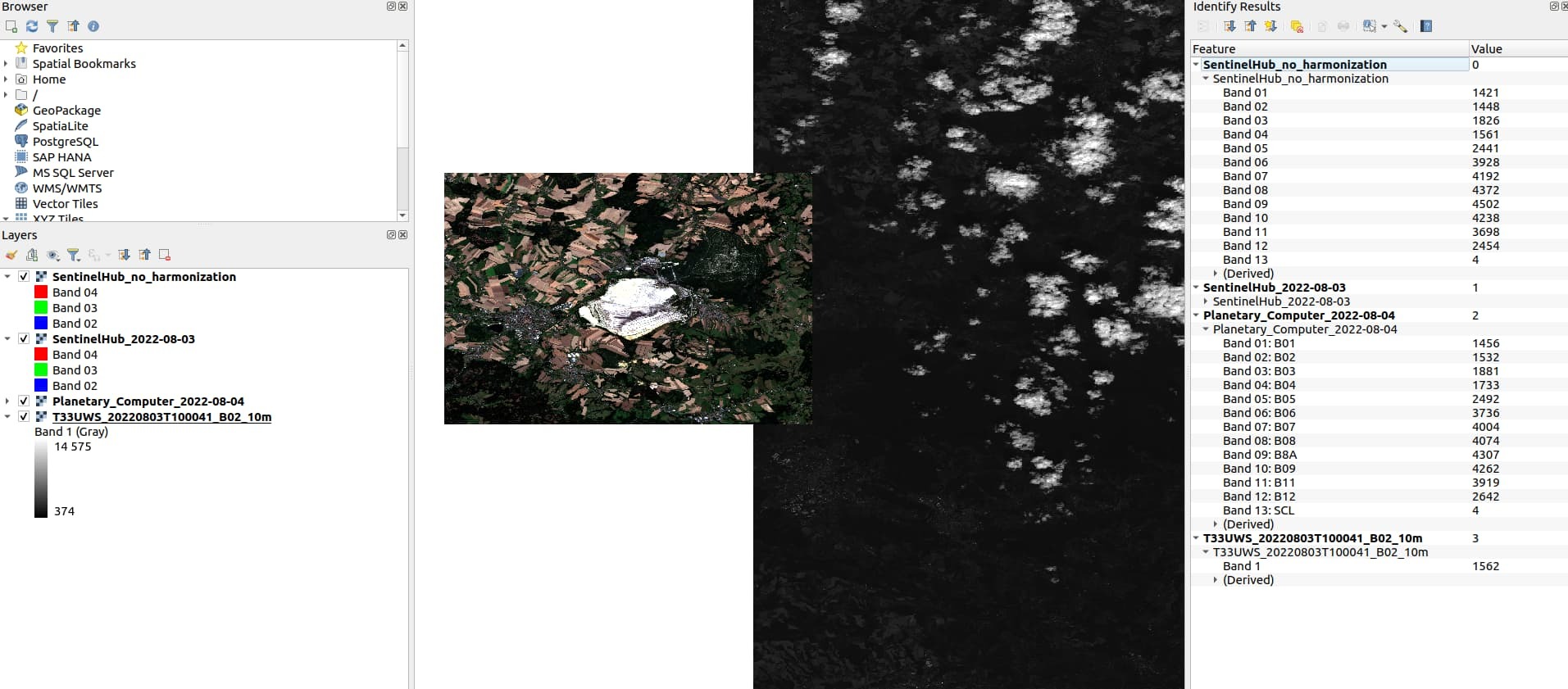

Data preview for both products in QGIS:

Thanks for your time!