Hi!

I’m still working on my script for temporal analysis, but I’m stuck because the .get_cloud_masks command is very slow on large areas.

I use BBoxSplitter to split my very large area into bboxes that are no more than 5000 pixels wide or high:

    largeur = int(np.ceil((Xmax_utm - Xmin_utm)/10))  # Image width in pixels (10 m resolution)
    hauteur = int(np.ceil((Ymax_utm - Ymin_utm)/10))  # Image height in pixels (10 m resolution)
    print('\nArea pixel size: {0} x {1}'.format(largeur, hauteur))

    >>>Area pixel size: 9968 x 7245

    if largeur > 5000 or hauteur > 5000:  # If the width or the height exceeds 5000 pixels
        if largeur > 5000:
            L = int(np.ceil(largeur/5000))
            print('%s cells wide' % (L))
        else:
            L = 1
        if hauteur > 5000:
            H = int(np.ceil(hauteur/5000))
            print('%s cells high' % (H))
        else:
            H = 1

    >>>2 cells wide
    >>>2 cells high
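For reference, the same cell-count logic can be written more compactly. This is only a sketch: the helper names `cells_needed` and `split_shape` are made up for this post, and the 5000-pixel limit is the one I assumed above. It only computes how many cells are needed and does not call BBoxSplitter itself.

```python
import math

MAX_PIXELS = 5000  # per-cell size limit assumed in this post


def cells_needed(n_pixels, max_pixels=MAX_PIXELS):
    """Number of cells needed so that each cell is at most max_pixels long."""
    # max(1, ...) covers the "else: L = 1" branch of the original code
    return max(1, math.ceil(n_pixels / max_pixels))


def split_shape(largeur, hauteur, max_pixels=MAX_PIXELS):
    """Return (L, H): the number of cells across the width and the height."""
    return cells_needed(largeur, max_pixels), cells_needed(hauteur, max_pixels)


L, H = split_shape(9968, 7245)
print('{0} cells wide, {1} cells high'.format(L, H))
```

With my area (9968 x 7245 pixels) this gives the same 2 x 2 grid as the code above.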

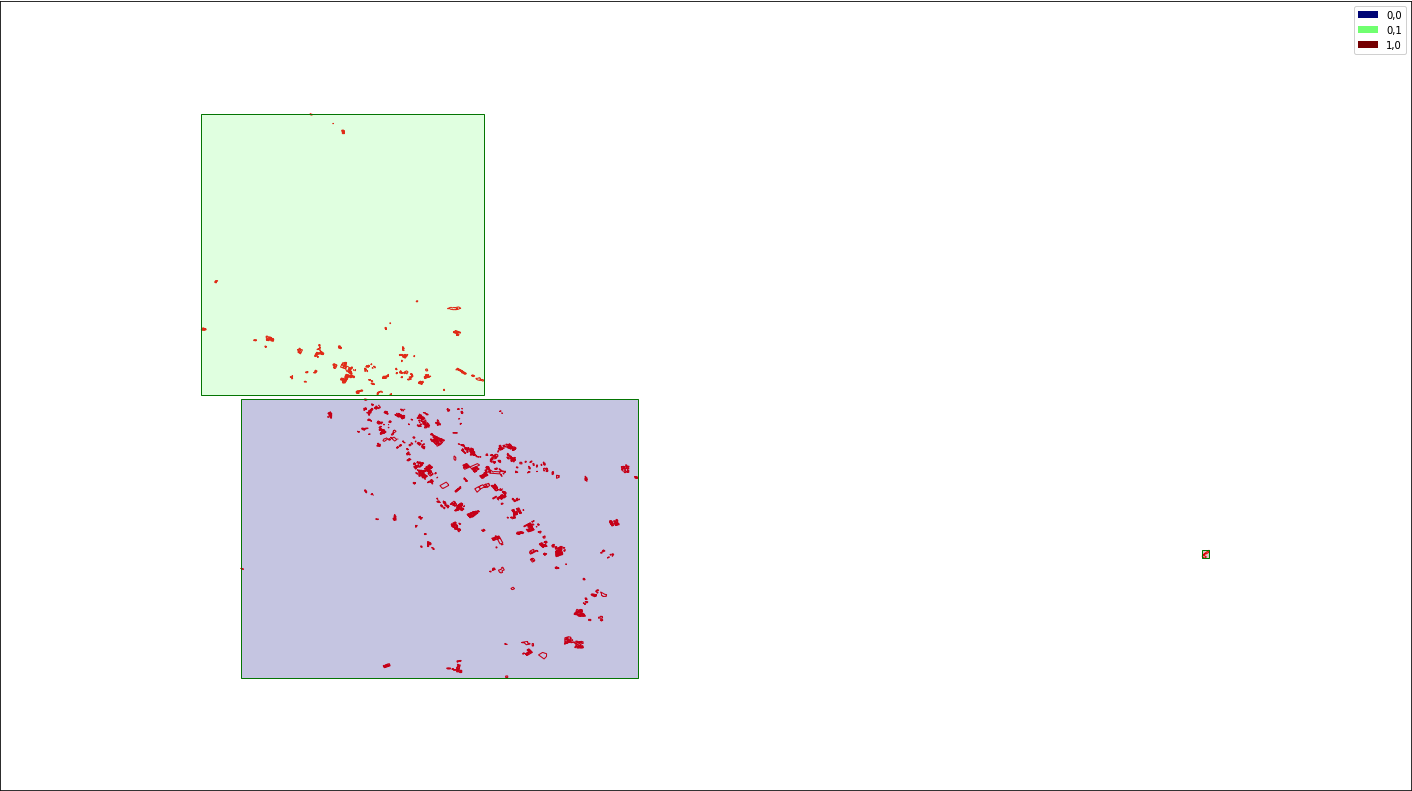

Here is an illustration:

I’m testing it on only 3 dates and it already takes a long time, so I can’t imagine running it on 3 years of data…

Do you have any ideas for speeding it up?