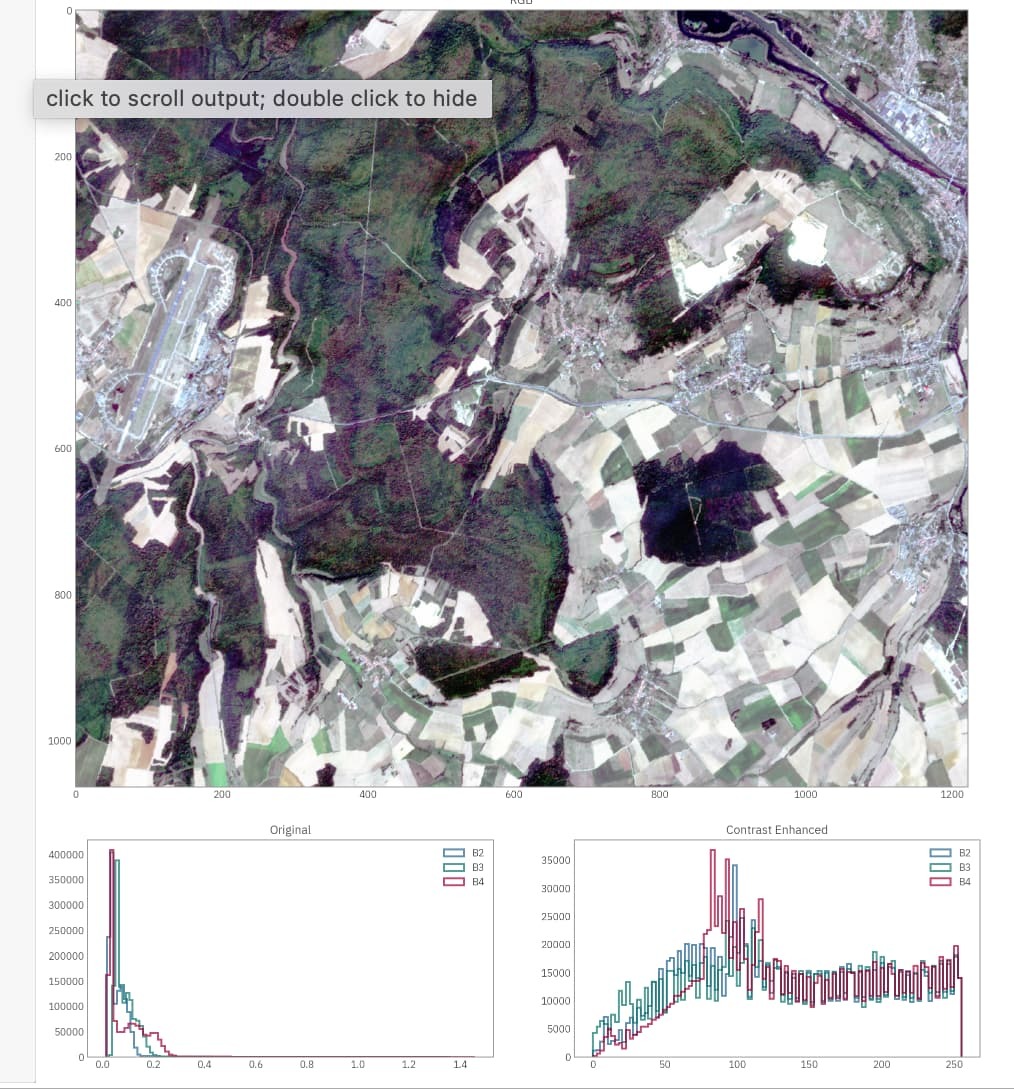

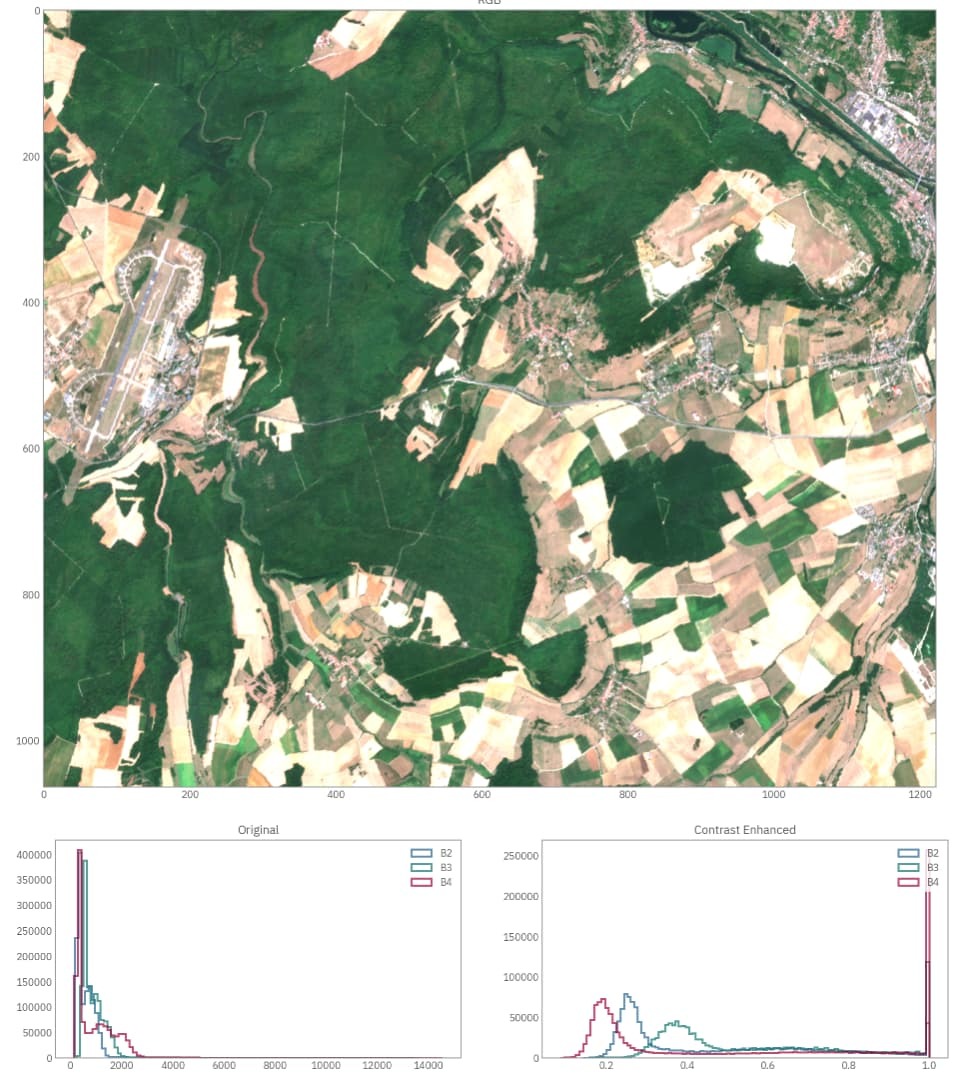

I am trying to use Sentinel-2 L2A images downloaded from Sentinel Hub for a deep learning application on RGB images. However, brightness levels differ from image to image, and they also seem to depend on the area of interest I export. Is there a consistent way to retrieve RGB images that can be fed directly to the ML model?

Does the sampling of pixel colors vary depending on the size of the area of interest (for example, a large area vs. a small one of 10 km²)?
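
To make concrete what I mean by "consistent", here is a minimal sketch of the kind of mapping I am hoping for. It assumes the red, green and blue bands (B04, B03, B02) can be exported as reflectance floats in the range 0 to 1 rather than as an already-stretched 8-bit preview. The function name `reflectance_to_rgb8` and the gain of 2.5 are my own illustrative choices, not something I know to be the recommended approach.

```python
import numpy as np


def reflectance_to_rgb8(bands, gain=2.5):
    """Map reflectance values (assumed 0..1) to uint8 with a fixed gain.

    The scaling uses no per-image statistics, so a pixel with a given
    reflectance maps to the same output value regardless of what else is
    in the exported area.
    """
    arr = np.asarray(bands, dtype=np.float32)
    # Brighten with a constant gain, then clip to the displayable range.
    scaled = np.clip(arr * gain, 0.0, 1.0)
    return np.round(scaled * 255.0).astype(np.uint8)


# Hypothetical 2x2 RGB tile in reflectance units (rows, cols, 3 channels).
tile = np.array(
    [[[0.05, 0.08, 0.04], [0.10, 0.12, 0.09]],
     [[0.30, 0.28, 0.25], [0.60, 0.55, 0.50]]]
)
rgb = reflectance_to_rgb8(tile)
print(rgb.shape, rgb.dtype)
```

By contrast, a per-image min/max or percentile stretch would depend on the pixels present in each export, which might explain the differences I am seeing between a large and a small area.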