Hello all,

I have been working through the LULC Python pipeline, which uses a combination of eo-learn and sentinelhub.

Does anyone have recommendations for reducing processing unit usage while testing the code?

While learning these tools I chose an area of about 1,000 square km. After two attempts with the pipeline code I have used 20,000 processing units, and I have no idea where the EOPatches are being saved. Something is being processed, and each run takes about 30 minutes, but afterwards there are no results and the output folder that was created remains empty. According to the error report, all executions failed with:

KeyError: "During execution of task VectorToRaster: 'lulcid'"

I am planning to use Google Colab, so that may be an additional hurdle.

I am less concerned with fixing this specific problem than with avoiding, in general, burning through a month of processing units on mistakes when implementing at a large scale.

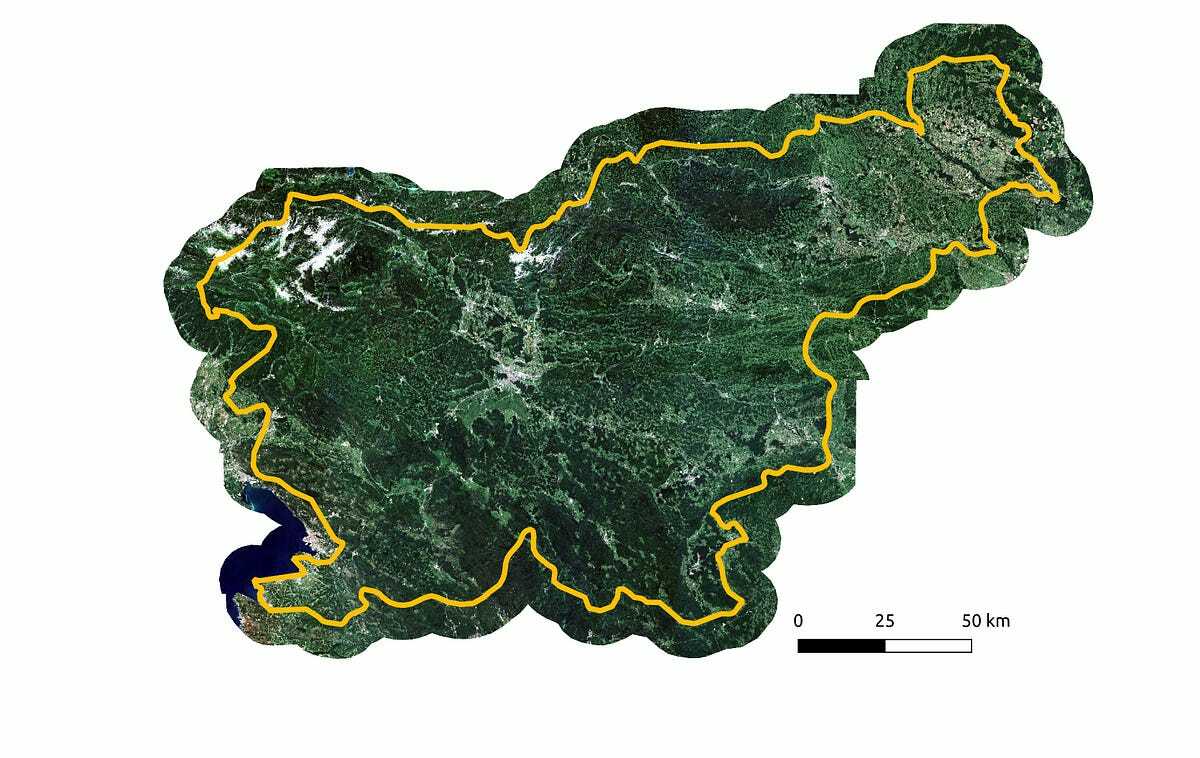

Is there a way to make a workflow, such as the land use example for Slovenia or other repetitive tasks that use large amounts of processing units, prompt the user or stop after an error in the first execution batch? Requests and mosaicking work fine for my AOI. Also, are significantly more processing units needed when the same area is first split with the BBoxSplitter?
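
To make the question concrete, something like the following is what I have in mind. It is only a minimal sketch in plain Python, not eo-learn's own API: `run_with_pilot`, `run_one`, and `pilot_size` are hypothetical names I made up, and `run_one` stands in for whatever executes the workflow for a single patch. The idea is to run a small pilot batch first and abort before the remaining patches are requested if anything in the pilot fails.

```python
from typing import Any, Callable, Iterable, List, Tuple


def run_with_pilot(
    jobs: Iterable[Any],
    run_one: Callable[[Any], Any],
    pilot_size: int = 1,
) -> Tuple[List[Any], List[Tuple[Any, Exception]]]:
    """Run jobs one by one; abort if any of the first `pilot_size` jobs fails.

    `run_one` is a hypothetical callable that processes a single patch
    (for example, one bounding box) and raises an exception on failure.
    """
    results: List[Any] = []
    failures: List[Tuple[Any, Exception]] = []

    for index, job in enumerate(list(jobs)):
        try:
            results.append(run_one(job))
        except Exception as exc:
            # Record the failure instead of silently moving on.
            failures.append((job, exc))

        # Once the pilot batch is complete, stop if it produced any failure,
        # so the remaining jobs never consume processing units.
        if index + 1 == pilot_size and failures:
            raise RuntimeError(
                f"{len(failures)} of {pilot_size} pilot job(s) failed; "
                "aborting before the remaining jobs are run."
            ) from failures[0][1]

    return results, failures
```

With `pilot_size=1`, an error such as the `KeyError` above would surface after a single patch rather than after the whole area has been processed. Is there an equivalent, built-in way to do this?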