Hello to all.

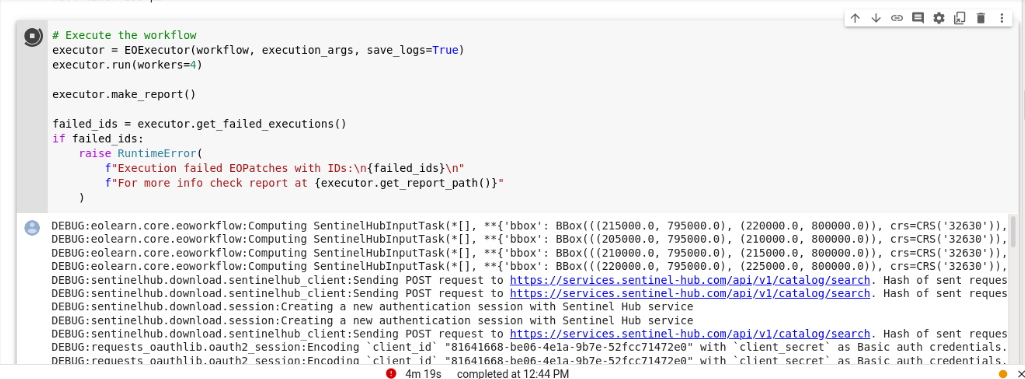

For a long time I ran the code from the Slovenia example without any issues. For a while now, however, I have been getting an endless stream of DEBUG errors, and the download never completes. I have tried reducing my study area and my time interval, to no avail.

Can you help me solve this problem?