Hi, I’m using Python and sentinelhub-py version 2.4.1 to fetch the full Sentinel-2 image history of approx. 300 agricultural fields. I know this puts a large load on the web service, which may return HTTP 500 errors as a result. I have tried to handle such errors with the following sentinelhub-py settings:

from sentinelhub.config import SHConfig

sh_config = SHConfig()

sh_config.max_download_attempt = 5

sh_config.download_sleep_time = 60

sh_config.download_timeout_seconds = 30

However, with these settings I now get the following HTTP 500 error for several of my requests:

Exception: DownloadFailedException('Failed to download with HTTPError:\n500 Server Error: Internal Server Error for url: https://services.sentinel-hub.com/ogc/wms/[INSTANCE_ID]?SERVICE=wms&BBOX=507395.0%2C6085280.0%2C507610.0%2C6085775.0&FORMAT=image%2Ftiff%3Bdepth%3D32f&CRS=EPSG%3A32632&WIDTH=43&HEIGHT=99&LAYERS=S2-L2A&REQUEST=GetMap&TIME=2018-10-10T08%3A40%3A18%2F2018-10-10T12%3A40%3A18&MAXCC=5.0&Downsampling=BILINEAR&Upsampling=BILINEAR&Transparent=False&ShowLogo=False\nServer response: "Out of retries"',)

I cannot find any documentation of this “Out of retries” error. I tried switching IP addresses but still get it, so I guess the error is bound to my INSTANCE_ID.

Is there any documentation of this HTTP error and how long is my INSTANCE_ID “locked” from performing this request?
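For reference, a minimal sketch of client-side retrying with exponential backoff, which is one common way to work around transient server errors like these. The names `retry_with_backoff` and `fetch` are hypothetical and not part of sentinelhub-py; `fetch` stands for any zero-argument callable that performs one request and raises on failure.

```python
import time


def retry_with_backoff(fetch, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call fetch() until it succeeds or max_attempts calls have failed.

    The wait doubles after every failed attempt: base_delay, 2*base_delay, ...
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except Exception:
            # Assumption: every exception is treated as retryable. In real use,
            # narrow this to the exception type your download client raises.
            if attempt == max_attempts:
                raise  # out of attempts, surface the last error
            sleep(base_delay * 2 ** (attempt - 1))
```

The `sleep` parameter is injectable only so the wait can be replaced in tests; in normal use the default `time.sleep` applies.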

Additional information:

It might be helpful to know that I also receive other types of HTTP 500 errors, whose cause I also do not understand:

- Exception: DownloadFailedException(‘Failed to download with HTTPError:\n500 Server Error: Internal Server Error for url: https://services.sentinel-hub.com/ogc/wms/[INSTANCE_ID]?SERVICE=wms&BBOX=518235.0%2C6091465.0%2C518655.0%2C6091945.0&FORMAT=image%2Ftiff%3Bdepth%3D32f&CRS=EPSG%3A32632&WIDTH=84&HEIGHT=96&LAYERS=S2-L2A&REQUEST=GetMap&TIME=2018-10-10T08%3A40%3A18%2F2018-10-10T12%3A40%3A18&MAXCC=5.0&Downsampling=BILINEAR&Upsampling=BILINEAR&Transparent=False&ShowLogo=False\nServer response: “java.util.concurrent.ExecutionException: java.util.concurrent.TimeoutException: Total timeout 3000 ms elapsed”’,)

- Exception: DownloadFailedException(‘Failed to download with HTTPError:\n500 Server Error: Internal Server Error for url: https://services.sentinel-hub.com/ogc/wms/[INSTANCE_ID]?SERVICE=wms&BBOX=602330.0%2C6157140.0%2C603005.0%2C6157875.0&FORMAT=image%2Ftiff%3Bdepth%3D32f&CRS=EPSG%3A32632&WIDTH=135&HEIGHT=147&LAYERS=S2-L2A&REQUEST=GetMap&TIME=2018-10-10T08%3A40%3A18%2F2018-10-10T12%3A40%3A18&MAXCC=5.0&Downsampling=BILINEAR&Upsampling=BILINEAR&Transparent=False&ShowLogo=False\nServer response: “java.util.concurrent.ExecutionException: java.lang.IllegalArgumentException”’,)
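To see how often each kind of error occurs, the "Server response" text can be pulled out of the exception messages and counted. This is only a sketch: `tally_server_responses` is a hypothetical helper, the sample messages are abbreviated versions of the ones above, and it assumes the response text is always wrapped in straight or curly double quotes.

```python
import re
from collections import Counter

# Captures the quoted text after "Server response:" (straight or curly quotes).
SERVER_RESPONSE = re.compile(r'Server response: ["“](.+?)["”]', re.DOTALL)


def tally_server_responses(messages):
    """Count exception messages by their 'Server response' text."""
    counts = Counter()
    for message in messages:
        match = SERVER_RESPONSE.search(message)
        key = match.group(1) if match else "<no server response>"
        counts[key] += 1
    return counts


# Abbreviated sample messages; the request URLs are left out for brevity.
messages = [
    'Failed to download with HTTPError:\n500 Server Error\nServer response: "Out of retries"',
    'Failed to download with HTTPError:\n500 Server Error\nServer response: "Out of retries"',
    'Failed to download with HTTPError:\n500 Server Error\n'
    'Server response: “java.util.concurrent.ExecutionException: java.lang.IllegalArgumentException”',
]

for response, count in tally_server_responses(messages).most_common():
    print(count, response)
```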

Regards Peter Fogh

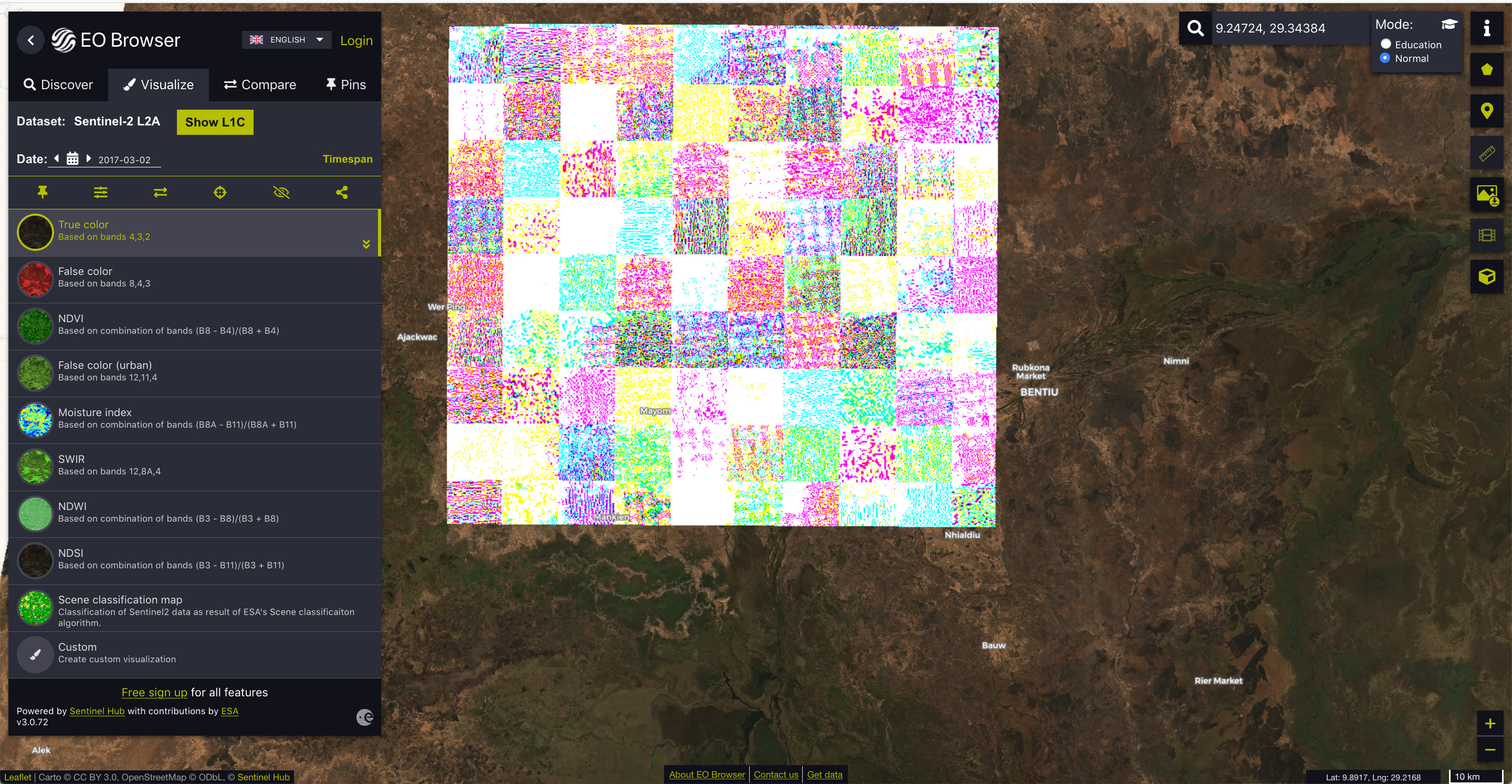

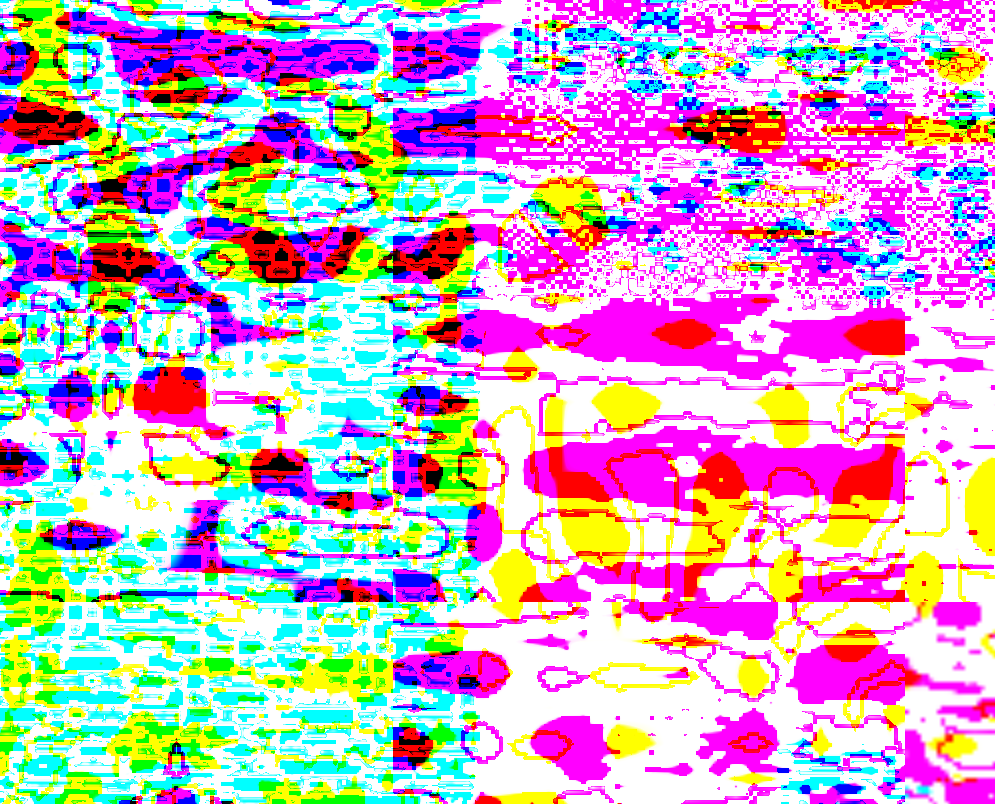

UPDATE

After looking more closely at the errors, I see that all of them are for requests with the time range “2018-10-10T08%3A40%3A18%2F2018-10-10T12%3A40%3A18” (i.e. 2018-10-10T08:40:18/2018-10-10T12:40:18), so I suspect that SentinelHub has an internal error when processing the data from this date?
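A quick way to check this kind of pattern is to decode the `TIME` parameter of each failed request URL and count the distinct values. The sketch below uses only the Python standard library; `time_ranges_of_failed_urls` is a hypothetical helper, `INSTANCE_ID` is a placeholder, and the URLs are shortened versions of the failing requests.

```python
from collections import Counter
from urllib.parse import parse_qs, urlsplit


def time_ranges_of_failed_urls(urls):
    """Count how often each decoded TIME value occurs in WMS request URLs."""
    counts = Counter()
    for url in urls:
        # parse_qs percent-decodes the values and returns a list per key
        query = parse_qs(urlsplit(url).query)
        counts[query.get("TIME", ["<missing>"])[0]] += 1
    return counts


# Shortened example URLs; INSTANCE_ID is a placeholder, not a real ID.
failed_urls = [
    "https://services.sentinel-hub.com/ogc/wms/INSTANCE_ID?SERVICE=wms&LAYERS=S2-L2A"
    "&TIME=2018-10-10T08%3A40%3A18%2F2018-10-10T12%3A40%3A18&MAXCC=5.0",
    "https://services.sentinel-hub.com/ogc/wms/INSTANCE_ID?SERVICE=wms&LAYERS=S2-L2A"
    "&WIDTH=84&HEIGHT=96&TIME=2018-10-10T08%3A40%3A18%2F2018-10-10T12%3A40%3A18",
]

for time_range, count in time_ranges_of_failed_urls(failed_urls).items():
    print(time_range, count)
```

If every failure maps to the same time range, as here, that points at the data for that acquisition rather than at the request load.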