I am experimenting with the histogram calculations for a dataset that has year information per pixel.

The binning conceptually works nicely in order to evaluate how many pixels are available for each year.

The problem is using either width and height, or resx and resy i can’t manage to hit exactly the native resolution for a arbitrary bounds selection.

I am not sure what kind of resampling is done, but the year values are “corrupted” through the resampling, no longer showing expected range, but actually showing maxima of easily 100 years in the future.

Is there a way to set the resampling approach to at least nearest neighbor to make sure the values stay within the original range?

Or as maybe better alternative is there a way to make sure the exact native resolution is used for the statistical evaluation so that no resampling is needed at all?

- Home

- Community overview

- Develop

- Analysis APIs

- Is there a way of specifying the resampling approach in the statistical api?

Is there a way of specifying the resampling approach in the statistical api?

- April 26, 2024

- 16 replies

- 189 views

16 replies

- April 26, 2024

The resampling can be defined using upsampling or downsampling parameter.

If you want to “exactly” hit the native resolution, you should be using the same CRS (i.e. UTM with appropriate zone for Sentinel-2), then choose the same resolution (in the same CRS…).

- Author

- April 26, 2024

Thank you for the quick reply. The link is for the resampling is for processing api? Specifically for Sentinel-2 L2A?. I don’t see any information for the statistical api on how to use resampling there. Also, does this work for BYOC data?

Trying to be more specific, how could i make sure to get the original resolution or make sure a specific interpolation is done for following statistical api request:

{

"input": {

"bounds": {

"geometry": {

"type": "Polygon",

"coordinates": [[[8.31,44.59],[10.47,44.59], [10.47,46.18],[8.31,46.18],[8.31,44.59]]]

}

},

"data": [

{

"dataFilter": {},

"type": "byoc-someidentifier"

}

]

},

"aggregation": {

"timeRange": {

"from": "2010-11-01T00:00:00Z",

"to": "2010-12-01T00:00:00Z"

},

"aggregationInterval": {

"of": "P1D"

},

"resx": how to define native resolution?,

"resy": how to define native resolution?,

"evalscript": "evalscript to extract data"

},

"calculations": {

"data":{

"histograms": {

"default": {

"bins": [1999, 2000, 2001, 2002, 2003, 2004]

}

}

}

}

}

I know using year values in the data is maybe not the most typical use case, but if we had for example temperature information in celsius, going from -50 to 50 and when applying the statistics it show a max value of 80 for the selected area because of what happens in aggregation/resampling, i think this makes the statistical api not so reliable to use.

- April 26, 2024

Hi,

As the data you are using is a BYOC collection, we do not know what the native projection or native resolution is. As this is your dataset, then I assume you know the native resolution and projection of your dataset. You can apply the same logic to your Statistical API request as the example that Grega provided.

Below I have put together an example using Statistical API, please note how the CRS is set in the properties part of the request "crs": "http://www.opengis.net/def/crs/EPSG/0/32601" and the resolution is set as resx and resy instead of using the size of the image as the input. It’s important to remember the resolution units are the same as the CRS used in your request. e.g. if it is a UTM zone then it is in metres but if it is WGS84 then this would be in degrees.

{

"input": {

"bounds": {

"bbox": [

-284343.074271,

15317212.842314,

-292181.952771,

15311099.017774

],

"properties": {

"crs": "http://www.opengis.net/def/crs/EPSG/0/32601"

}

},

"data": [

{

"dataFilter": {},

"processing": {

"downsampling": "BILINEAR"

},

"type": "sentinel-2-l2a"

}

]

},

"aggregation": {

"timeRange": {

"from": "2024-01-08T00:00:00Z",

"to": "2024-02-08T23:59:59Z"

},

"aggregationInterval": {

"of": "P10D"

},

"resx": "10",

"resy": "10",

"evalscript": "//VERSION=3\nfunction setup() {\n return {\n input: [{\n bands: [\n \"B04\",\n \"B08\",\n \"SCL\",\n \"dataMask\"\n ]\n }],\n output: [\n {\n id: \"data\",\n bands: 3\n },\n {\n id: \"scl\",\n sampleType: \"INT8\",\n bands: 1\n },\n {\n id: \"dataMask\",\n bands: 1\n }]\n };\n}\n\nfunction evaluatePixel(samples) {\n let index = (samples.B08 - samples.B04) / (samples.B08+samples.B04);\n return {\n data: [index, samples.B08, samples.B04],\n dataMask: [samples.dataMask],\n scl: [samples.SCL]\n };\n}\n"

},

"calculations": {

"default": {}

}

}

This should make this clearer to you, if not then please let us know.

- Author

- April 26, 2024

Hi, thanks also for the quick reply, i think it is clear now, i will test it out when i have a chance.

Maybe just to close also on the question of the thread title, i guess there is no way to specify resampling method in the statistical api? Might be interesting it resampling is still needed because of a large area request.

- April 26, 2024

I’ve updated the example above to included a downsampling of the data. You can also specify upsampling depending on your use case. You have a choice of NEAREST, BILINEAR or BICUBIC as documented here

- Author

- April 26, 2024

Ah! It is within the data property, i did not find that when looking for it, great.

So i think all questions are answered, so feel free to close topic as resolved, thanks!

- Author

- April 26, 2024

Hello again, i played around with the up- and downsampling to try and understand why i get so strange values, but i am quite confused about the results.

Using the exact same request, only changing the up- and downsampling i get following results:

NEAREST:

"bands": {

"B0": {

"stats": {

"min": 0.0,

"max": 2045.0,

BILINEAR:

"max": 1993.0,

BICUBIC:

"max": 2083.0,

in theory the data should only have values from 1985 to 2015 (plus the 0 values). I do not understand how the statistical api return such high values depending on the interpolation, could you help me understand this?

- April 26, 2024

I don’t know enough about your dataset to be able to help you intepret your results. It might be worth your time investigating the source data which I assume was processed by yourself to understand where the unexpected values are coming from. It might also be worth checking if you are using the appropiate SampleType in your request. You can read more about this here.

- Author

- April 26, 2024

Hi, it is a 1 band UINT16 cog with values 0, 1985 to 2015. I am requesting UINT16 also as sample type.

I can only think that these strange values are appearing when creating the “result” raster on SH on which then the statistics are calculated from, so i personally think the problem is in that step. This idea seems to be backed up because different interpolation methods provide different max values in the “result” data values for the same original data retrieved.

I will still double check the dataset again to make sure.

- Author

- April 26, 2024

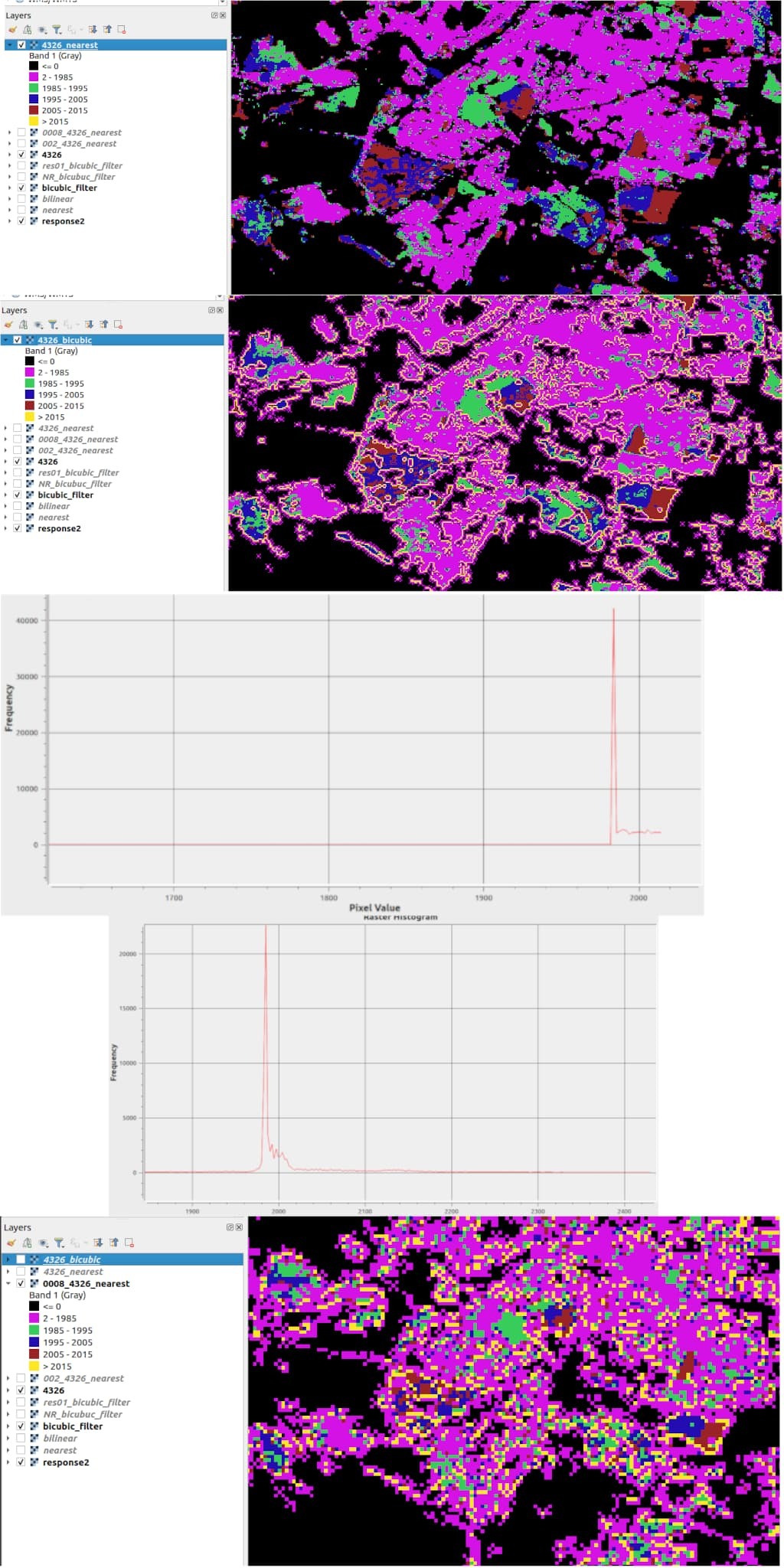

Hello again, so, i thought to myself i could try out the process api in order to understand the “result” raster that is created and i must say, i don’t understand what sentinelhub is returning.

Here are some of the results.

I know the resolution is 0.000269494585236 in lat and long for 4326 projection. If i request that resolution and use NEAREST sampling, i get the result i would expect:

If i use bicubic sampling (which in my opinion should not make any difference as i should be getting the native resolution) i get a ton of artifacts:

With artifacts i mean values above 2015 which is in theory the maximum of the original data.

Here is the histogram for the nearest dataset:

here for the bicubic:

I thought, ok, as long as i stick to nearest i should be ok, but if i reduce the resolution by a little, lets say to 0.0008 using nearest i get the following:

with basically the same histogram as for the previous bicubic one.

So i am really at a loss on how to trust the results of these endpoints, maybe i am overseeing something obvious?

When trying to reply it said i can only have one image, so i combined all here, should be in order as i mention them in the text, hopefully it is clear:

- April 26, 2024

-

Bicubic resampling uses smoothing algorithms to interpolate the data and I would not use it on a discrete dataset such as yours. For this type of data, I would not deviate from nearest sampling. This post probably explains it better than me: Raster Resampling for Discrete and Continuous Data - GIS Geography

-

In addition, Sentinel Hub APIs were designed for the processing and analysis of satellite imagery and not discrete datasets such as yours. It doesn’t mean that these types of data can’t be used with the APIs but because satellite imagery is a continuous raster dataset and yours is discrete.

You can also try experimenting with resampling your dataset using the different methods in QGIS and see if you get the same result here as you do in Sentinel Hub.

- Author

- April 26, 2024

As described in the link in point 1. “Because nearest neighbor resampling doesn’t alter any values in the output raster data set, it is ideal for categorical, nominal, and ordinal data.”

So the main issue i am describing is that using the NEAREST returns values that are not within the original dataset, so i would say something is wrong with that resampling method in the implementation.

- April 26, 2024

Is the “original dataset” L2A or is some COG loaded as BYOC?

If the latter, you need to take into account that interpolation is happening also when you are COG-ifying the product.

We are pretty confident about the implementation.

If you provide an exact request and your findings, we might take a look, when some of the engineers have time.

- Author

- April 26, 2024

The original dataset is a byoc cog, as i explained in the thread, if i request the native resolution with NEAREST i get the correct original data (within the expected data range, as can also be seen in the screenshots) if i however only change the resolution in the request (to a lower resolution) keeping the NEAREST sampling i get values completely outside the original data range.

Quick consideration, writing this down, I imagine it is possible that when fetching lower resolution your actually taking the values from overviews? If those overviews were generated with another type of interpolation i imagine that might explain things.

*edit: just realized this is what you meant with “interpolation is happening also when you are COG-ifying the product”

- April 26, 2024

We are indeed using overview layers of the COG… this is why they are for (the main benefit of COG!). So you should make sure that overview layers are as well created with nearest neighbour (or similar).

Our example recommends AVERAGE, which should not distort values, assuming they are not a classifier or similar.

- Author

- April 26, 2024

I think that explains the behavior quite well, as i was not the person doing the data ingestion i need to coordinate with them, but i think we found the underlying issue, thank you for the support!

Maybe it would be good to point this out in the documentation for the resampling property, something in the lines of:

Info: the process accesses data from overviews if available, if a different resampling method was used for their creation the configured approach might not provide the expected results

Enter your E-mail address. We'll send you an e-mail with instructions to reset your password.

Scanning file for viruses.

Sorry, we're still checking this file's contents to make sure it's safe to download. Please try again in a few minutes.

OKThis file cannot be downloaded

Sorry, our virus scanner detected that this file isn't safe to download.

OK

©

2025

Planet Labs PBC. All rights reserved.

|

Privacy Policy

|

California Privacy Notice

|

California Do Not Sell

Your Privacy Choices

|

Cookie Notice

|

Terms of Use