We are having problems with inconsistent scene tile downloads. Very frequently, imagery is available on the ESA site but not on AWS.

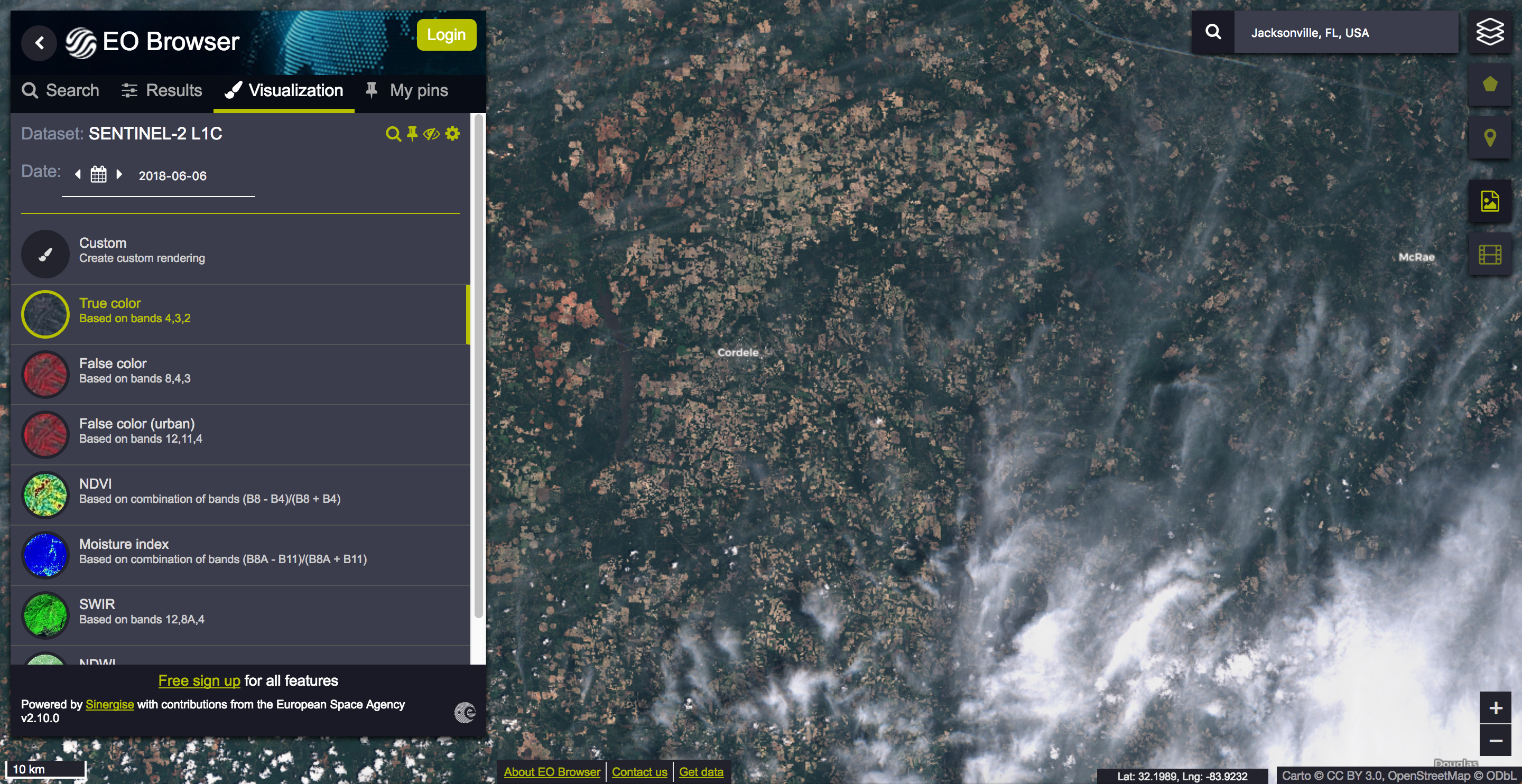

The current issue is with scene tile 17SKR and the surrounding tiles on 6/6/18 and 6/11/18. They do show up in the quicklooks, but we cannot access them through our script.
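To help reproduce this, here is a minimal sketch of how the expected AWS key prefix for a tile and date could be built. It rests on several assumptions that are not confirmed above: that the imagery is Sentinel-2 L1C, that the bucket uses the `tiles/<zone>/<band>/<square>/<year>/<month>/<day>/<sequence>/` layout without zero padding, and that the dates are June 6 and June 11, 2018. The helper name `tile_prefix` is hypothetical and is not taken from our script.

```python
import re
from datetime import date


def tile_prefix(tile: str, day: date, sequence: int = 0) -> str:
    """Build the assumed S3 key prefix for one MGRS tile on one date."""
    # Split an MGRS tile ID such as "17SKR" into UTM zone, latitude band,
    # and 100 km grid square. Latitude bands skip the letters I and O.
    match = re.fullmatch(r"(\d{1,2})([C-HJ-NP-X])([A-Z]{2})", tile.upper())
    if match is None:
        raise ValueError(f"not an MGRS tile ID: {tile!r}")
    zone, band, square = match.groups()
    # Assumed layout, with no zero padding on zone, month, or day:
    # tiles/<zone>/<band>/<square>/<year>/<month>/<day>/<sequence>/
    return (
        f"tiles/{int(zone)}/{band}/{square}/"
        f"{day.year}/{day.month}/{day.day}/{sequence}/"
    )


# The two acquisitions reported as missing (dates assumed to be month/day/year).
for acquired in (date(2018, 6, 6), date(2018, 6, 11)):
    print(tile_prefix("17SKR", acquired))
```

If listing the bucket under these prefixes returns nothing while the quicklooks exist on the ESA side, that would point to the tiles not having been published to AWS rather than to a problem in the script.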