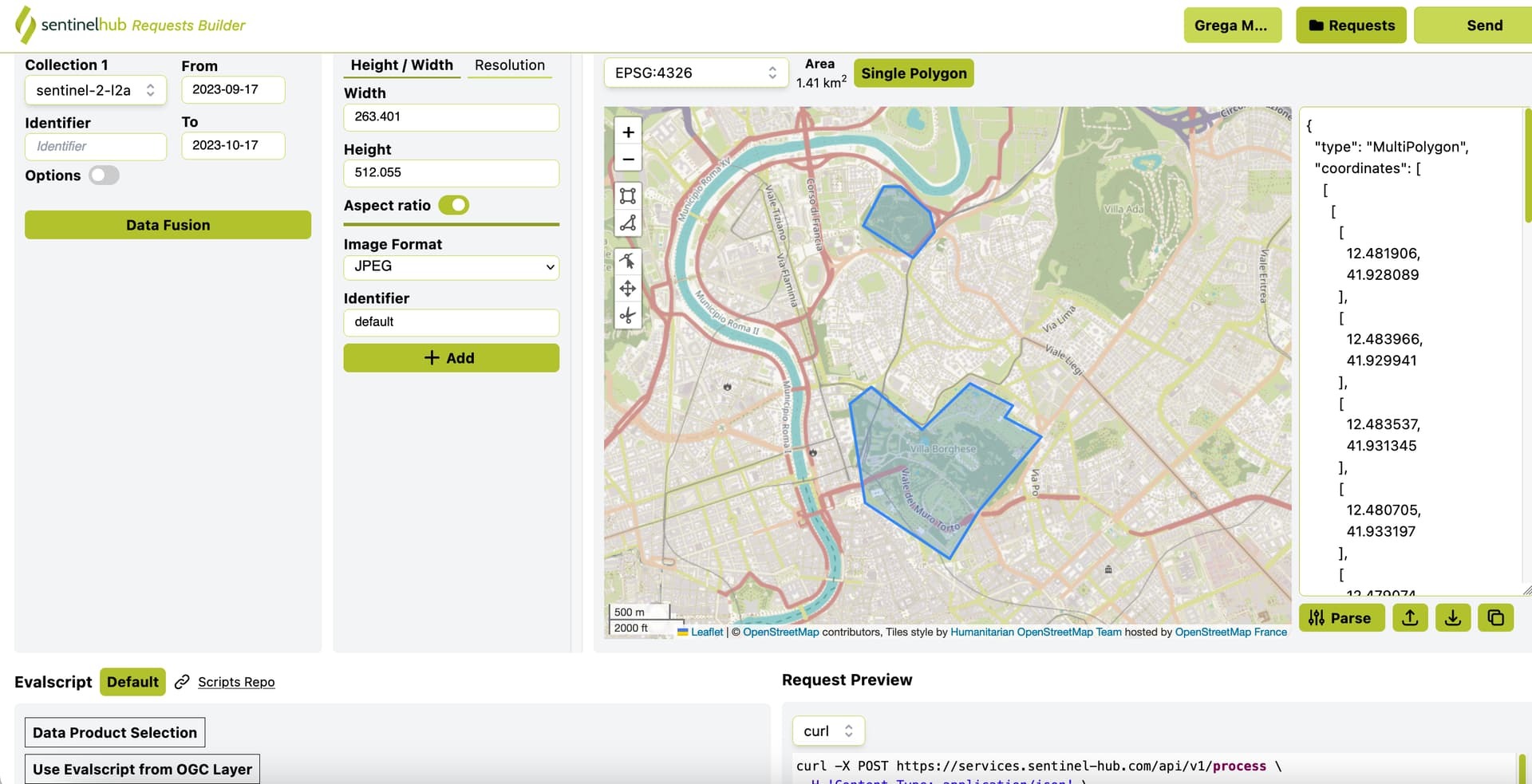

I am using the [Process API](https://services.sentinel-hub.com/api/v1/process), and I need to fetch data for 500 places in less than 30 seconds. As far as I know, Sentinel Hub doesn't support returning multiple PNG/JPEG images in one request, so we have to loop over the coordinates and call the API once per image.

Problem: the API calls fail and return a rate-limit exception.

Is there any way to send 500 requests in less than 30 seconds, or to make a single call and get 500 images back?

Our request payload is below:

    const input = {
      input: {
        bounds: {
          properties: {
            crs: "http://www.opengis.net/def/crs/OGC/1.3/CRS84",
          },
          geometry: {
            type: "Polygon",
            coordinates: [coordinates],
          },
        },
        data: [
          {
            type: "S2L2A",
            dataFilter: {
              timeRange,
              mosaickingOrder: "leastCC",
            },
          },
        ],
      },
      output: {
        width: w,
        height: basSize,
        responses: [
          {
            identifier: "ndvi_image",
            format: {
              type: "image/png",
              quality: 10,
            },
          },
        ],
      },
      evalscript: ndviScale,
    };
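
For context, here is a minimal sketch of the client-side throttling we could wrap around the per-place loop: it caps the number of requests in flight and retries with exponential backoff whenever a response comes back with HTTP status 429. The helper names (`runWithLimit`, `withRetry`), the concurrency of 10, and the delay values are all assumptions made for illustration and are not part of any Sentinel Hub SDK. It only smooths out the bursts; whether 500 images fit into 30 seconds still depends on the rate limit of the account.

```javascript
// Sketch only: all helper names and numbers below are hypothetical.

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run async task functions with at most `limit` in flight.
// Results are returned in the same order as `tasks`.
async function runWithLimit(tasks, limit) {
  const results = new Array(tasks.length);
  let next = 0;

  // Each worker keeps pulling the next unclaimed task until none are left.
  async function worker() {
    while (next < tasks.length) {
      const index = next++;
      results[index] = await tasks[index]();
    }
  }

  const workers = Array.from({ length: Math.min(limit, tasks.length) }, worker);
  await Promise.all(workers);
  return results;
}

// Call `request` again while it resolves to a response with status 429.
// `request` must return an object with a numeric `status` (a fetch Response works).
// After `maxRetries` retries the last response is returned as-is.
async function withRetry(request, { maxRetries = 5, baseDelayMs = 500 } = {}) {
  for (let attempt = 0; ; attempt++) {
    const response = await request();
    if (response.status !== 429 || attempt >= maxRetries) return response;
    await sleep(baseDelayMs * 2 ** attempt); // exponential backoff: 500, 1000, 2000, ...
  }
}

// Hypothetical usage (Node 18+ global fetch). `places`, `token` and
// `buildPayload` are placeholders for our own data and payload builder:
//
// const tasks = places.map((place) => () =>
//   withRetry(() =>
//     fetch("https://services.sentinel-hub.com/api/v1/process", {
//       method: "POST",
//       headers: {
//         "Content-Type": "application/json",
//         Authorization: `Bearer ${token}`,
//       },
//       body: JSON.stringify(buildPayload(place)),
//     })
//   )
// );
// const responses = await runWithLimit(tasks, 10);
```

The worker-pool form was chosen over splitting the list into fixed chunks so that one slow request does not hold up the rest of its chunk.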